Exam 3 study guide

The one-hour study guide for exam 3

Latest update: Fri May 6 12:14:08 EDT 2016

Disclaimer: This study guide attempts to touch upon the most important topics that may be covered on the exam but does not claim to necessarily cover everything that one needs to know for the exam. Finally, don't take the one hour time window in the title literally.

Multicast

A unicast is a point-to-point transmission. The communications we looked at to date were unicast messages: a node sends a message to a unique destination. A broadcast is a message that is received by everyone on a network. We encountered this when we examined IP addresses. An IP address with all bits set to 1 is a limited broadcast address where the datagram is delivered to all nodes on the local area network but not forwarded by routers. A directed broadcast address where only host bits are set to 1 (the low-order bits that make up the host, as opposed to the network bits) delivers a datagram to all hosts on the specified subnet. Routers may forward the datagram to that subnetwork.
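As a small illustration of the two broadcast forms, the sketch below uses Python's standard `ipaddress` module. The subnet `192.168.1.0/24` and the helper name `directed_broadcast` are made up for the example.

```python
import ipaddress

# Limited broadcast: all 32 address bits set to 1.
LIMITED_BROADCAST = str(ipaddress.IPv4Address(0xFFFFFFFF))

def directed_broadcast(cidr: str) -> str:
    """Return the address with all host bits set to 1 for the given subnet."""
    # strict=False lets the caller pass any host address inside the subnet.
    network = ipaddress.ip_network(cidr, strict=False)
    return str(network.broadcast_address)

if __name__ == "__main__":
    print(LIMITED_BROADCAST)
    print(directed_broadcast("192.168.1.0/24"))
```

The network bits are left untouched and only the host portion is filled with ones, which is why the result depends on the prefix length.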

A multicast is a message that is delivered to all members of a group. That is, it is delivered to all hosts that have subscribed to receive the multicast. These group members do not all have to reside on the same local area network. A simple way of implementing a multicast is by an n-way unicast. The sending host simply iterates over the list of destinations and sends a datagram to each destination. This is inefficient since the sender must transmit N times the traffic. Moreover, the sender needs to know all of the recipients.

It is more efficient, and desirable, to have routers replicate packets. With uncontrolled flooding, a host sends a single datagram that is then replicated by each router and sent over every other interface in that router. The problem with this approach is that any cycles in the graph formed by router connections will create a broadcast storm: a router will receive a duplicate datagram, send it out on each interface, receive one or more of those duplicates at a later time, duplicate and send that, and so on.

A controlled flooding approach ensures that these cycles do not occur. Sequence number controlled flooding requires each multicast packet to have a sequence number affixed to it. Each router keeps track of the sequence numbers of datagrams that it has recently routed. If it receives a datagram with a new sequence number, the router sends a copy over each link except the one it came in on. If the router gets a packet with a sequence number that it has already seen, it discards the packet. Reverse path forwarding (RPF) is a technique that does not require adding new data to a packet. When a router receives a datagram, it looks at the datagram’s source address and then consults its routing table to see whether the datagram arrived on the link that corresponds to the route to the source (which will be the shortest path to the source). If it does, then the router forwards a copy of the packet onto every other link. If it does not, that indicates that the packet is a duplicate that arrived through a cycle. That packet is discarded. A multicast based on controlled flooding using RPF is called a source-based tree: the flow of multicast packets forms a tree that is rooted at the initial sender. With a source-based tree, each sender establishes a separate shortest-path tree for a multicast group. Since RPF is used, routers have to keep track of the sender of the multicast packet.
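The two controlled-flooding checks can be sketched as forwarding decisions made by a single router. This is only an illustration: the class and function names, the interface labels, and the choice to track sequence numbers per source are assumptions, not part of any real router implementation.

```python
class SequenceFloodingRouter:
    """Sequence-number controlled flooding for one router (illustrative)."""

    def __init__(self, interfaces):
        self.interfaces = list(interfaces)
        self.seen = set()  # (source, sequence number) pairs already forwarded

    def receive(self, source, seq, arrival_iface):
        """Return the interfaces onto which the datagram should be copied."""
        if (source, seq) in self.seen:
            return []  # duplicate: discard
        self.seen.add((source, seq))
        return [i for i in self.interfaces if i != arrival_iface]


def rpf_forward(route_to, interfaces, source, arrival_iface):
    """Reverse path forwarding check.

    route_to maps a source address to the interface this router would use
    to reach that source (assumed to come from the unicast routing table).
    """
    if route_to.get(source) != arrival_iface:
        return []  # did not arrive on the reverse path: treat as a duplicate
    return [i for i in interfaces if i != arrival_iface]
```

Note that the RPF version keeps no per-packet state; it relies only on the routing table the router already has.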

Another approach to controlled flooding is not to send a copy of the datagram onto every link in the router. Instead, we create an overlay network that is a subset of the full network. This overlay network is a spanning tree, which is a graph that contains all the nodes of the network but only a subset of the edges such that the graph is connected (all nodes are reachable) but there are no cycles.

One way of constructing a spanning tree is to pick a random node to serve as a rendezvous point (also known as a center node). Every node that wants to receive multicasts will send a join message to that rendezvous point. As each join message propagates through routers, each router records the incoming and outgoing interfaces as being associated with that multicast address. The incoming and outgoing links become part of the spanning tree. When all interested nodes have sent join messages, the spanning tree is complete. Any multicast messages will be forwarded only along the links that make up the spanning tree. This multicasting technique is called a group-shared tree since only interested edge routers become part of the tree. With a group-shared tree, a single tree is shared among all senders for a multicast group; each sender has to send its multicast packets to a common rendezvous point in the exact same manner as if it were sending unicast messages. Unlike a source-based tree, routers do not need to make routing decisions based on the sender’s address for a multicast destination.

IP multicast routing

IP multicast is designed, like IP, to be decentralized and span multiple physical networks. Membership is dynamic: a machine can join or leave a multicast group at any time. There is no central coordinator and no restriction on the number of hosts that can be in a group. Multicasting provides network efficiency. Datagrams in a multicast stream only need to be replicated when a router needs to send them to multiple network links. A sender transmits only a single stream of datagrams and only one stream of datagrams is ever needed on any network segment regardless of the number of receivers.

An IP multicast address (also known as a class D address) is an IP address that starts with the bit pattern 1110 and contains a 28-bit multicast group ID. With IPv6, a multicast group address starts with the bit pattern of eight 1s (1111 1111) and contains a 112-bit multicast group ID (the other bits are used for flags and to indicate scope; we will not cover that here). A host may join any IP multicast address and receive messages addressed to that multicast ID. The collection of systems that are interested in receiving multicast messages from a specific multicast group is called a host group.
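The bit patterns described above can be checked directly. The following is a minimal sketch using Python's standard `ipaddress` module; the function names are invented for this example.

```python
import ipaddress

def is_ipv4_multicast(addr: str) -> bool:
    """True if the top four bits of the address are 1110 (class D)."""
    value = int(ipaddress.IPv4Address(addr))
    return (value >> 28) == 0b1110

def ipv4_group_id(addr: str) -> int:
    """Return the low 28 bits, i.e. the multicast group ID."""
    return int(ipaddress.IPv4Address(addr)) & 0x0FFFFFFF

def is_ipv6_multicast(addr: str) -> bool:
    """True if the top eight bits of the address are all 1s."""
    value = int(ipaddress.IPv6Address(addr))
    return (value >> 120) == 0xFF
```

In dotted-decimal form, the 1110 prefix corresponds to first octets 224 through 239.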

Routers have to get involved to support multicasting beyond the local area network. Two protocols are used to implement multicasting. The Internet Group Management Protocol (IGMP) is designed for hosts to inform routers that they are interested in joining a multicast group. The Protocol Independent Multicast (PIM) protocol enables routers to tell their neighboring routers that they are, or no longer are, interested in receiving packets for a particular multicast group.

A host uses IGMP to send a multicast report message to join a specific multicast group. A multicast-aware router will get this message and now know that the link on which the message arrived needs to receive any packets addressed to that multicast group. Periodically, a router will send a membership query, asking hosts what multicast groups they want to receive. If no host on a network link responds, the router will assume that nobody on that LAN is interested in receiving multicast packets. If a host is interested, it has to re-send a multicast report. This technique of requiring renewals and deleting a subscription if no renewal is received is called soft state. A host may send an explicit leave group message to state that it is no longer interested but the soft state mechanism ensures that multicasts will not be sent onto the network even if that does not take place (if the machine dies, for example).
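Soft state can be pictured as a table whose entries expire unless they are refreshed. The sketch below is a simplification under several assumptions: the class name, the `lifetime` parameter, and the use of caller-supplied timestamps are all invented here, and it tracks one subscription per group rather than modeling real IGMP timers or multiple hosts on a link.

```python
class SoftStateTable:
    """Per-link multicast subscriptions that lapse unless renewed."""

    def __init__(self, lifetime):
        self.lifetime = lifetime
        self.expiry = {}  # group address -> time at which the entry lapses

    def report(self, group, now):
        """A membership report creates or renews the subscription."""
        self.expiry[group] = now + self.lifetime

    def leave(self, group):
        """An explicit leave removes the entry immediately."""
        self.expiry.pop(group, None)

    def groups(self, now):
        """Drop expired entries and return the groups still subscribed."""
        self.expiry = {g: t for g, t in self.expiry.items() if t > now}
        return sorted(self.expiry)
```

A host that crashes simply stops sending reports, so its entry disappears after `lifetime` without any explicit cleanup message.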

IGMP allows a router to know which multicast groups the nodes on its connected LANs are interested in. PIM, Protocol Independent Multicast, is responsible for conveying membership information among routers. It assumes the presence of other protocols to know the network topology and which routers are connected together. There are two approaches to multicasting on the WAN (wide-area network): flooding and sparse-mode multicast.

Flooding, also known as Dense Mode Multicast, uses a source-based tree. That is, all multicast traffic originates from the source and the message is duplicated at a router and sent to all of its connected routers. Each of those routers, in turn, duplicates and sends the message to all of its connected routers, and so on. Reverse path forwarding (RPF) is used to ensure that no forwarding cycles arise. PIM Dense Mode floods the entire network of connected multicast-aware routers. A router stops forwarding traffic only when it receives a Prune message from a router telling it that the router does not want the multicast traffic for that address. It does this if its downstream routers are not interested in that multicast stream. If a node on a LAN joins a multicast group at a later time and sends an IGMP message to a router, that router would then send a PIM Graft message to its connected routers to state interest in the stream. Dense mode only makes sense when there are interested receivers spread through most locations covered by multicast-aware routers. It is rarely used.

In contradistinction to Dense Mode, PIM Sparse Mode Multicast starts with requests from multicast receivers rather than flooding the network with traffic from the sender. Each multicast group must be associated with a router known as a rendezvous point. Edge routers that are interested in receiving a multicast group send join messages to that rendezvous point. This builds a spanning tree between the rendezvous point and the subscribers. This rendezvous point acts as a central point that senders route to when they are transmitting multicast streams. The advantage of PIM sparse mode multicast is that packets go only where needed.

Data Link Layer

The data link layer is concerned with sending and receiving data on the actual communication links that connect machines. These are the links that constitute a local area network (LAN). Packets at the data link layer are called frames. Medium Access Control (MAC) is the general term for the protocol that is used for transmitting and receiving link-layer frames. Interfaces at the link layer use link-layer addressing. A MAC address (for example, an Ethernet address) is different from, and unrelated to, an IP address. An Ethernet MAC address is globally unique to a device and there is no expected grouping of such addresses within a local area network. IP addresses on a LAN, on the other hand, will share a common network prefix.

Error Detection & Correction

MAC protocols usually employ error detection codes and sometimes employ error detection and correction codes. We’ve seen that IP used error detection codes to validate an IP header and both TCP and UDP used them to validate their headers and data. Why do we need this at the link layer? The basic advantage of detecting errors at the link layer is to catch bad frames early and avoid the overhead of propagating a useless frame up the network stack. If the firmware on a network card can detect an error, it can drop the packet and not even waste time interrupting the processor. If error correcting codes are used, the link layer now has the opportunity to correct a limited amount of bit errors in the received frame. This can avoid the costly delay of having to retransmit a packet.

Parity

The simplest form of error detection is parity. With parity, we add one bit to a block of data, called a parity bit. With even parity the parity bit is set such that the total number of one bits in the block of data (including parity) is an even number. With odd parity the parity bit is set such that the total number of ones is an odd number. Parity is simple to compute and requires a low overhead both in terms of storage and computation. However, it cannot detect an even number of bit errors (one error cancels the other). Moreover, in networks, bit errors typically occur in bursts, not single bits, so parity is a poor choice for this kind of application. Parity is popular in applications where errors rarely happen and, when they do, are usually single-bit errors. Memory chips are an example where parity codes are useful.
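A parity bit is quick to compute, and the same computation shows its blind spot. This is a minimal sketch with invented function names; bits are represented as a list of 0s and 1s.

```python
def parity_bit(bits, even=True):
    """Return the parity bit to append to a block of bits."""
    bit = sum(bits) % 2          # 1 if the block has an odd number of ones
    return bit if even else bit ^ 1

def parity_ok(block, even=True):
    """Check a block that already includes its parity bit."""
    expected = 0 if even else 1
    return sum(block) % 2 == expected
```

Flipping any two bits of a valid block leaves the count of ones with the same parity, so `parity_ok` still returns `True` for the corrupted block.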

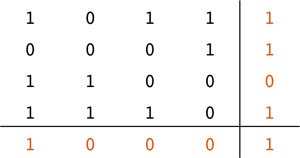

Two-dimensional parity

The use of parity is based on the assumption that errors are rare and multi-bit errors are even more rare. We can arrange a sequence of bits into a two-dimensional M × N array. Then we generate a parity bit for each row and another one for each column. Finally, we generate a parity bit for the intersection of the row and column parity bits. To validate data, we check the parity along each row and each column. If there is a single bit error in the data, the intersection of the row and column with parity errors will identify the bad bit.

Two-dimensional parity, which can be easily extended to n dimensions, is a simple example of an error correcting code (ECC). Error correcting codes were pioneered by Richard Hamming in 1950 and there are numerous such codes, multi-dimensional parity being one of the simplest. A data transmission that uses error correcting codes is said to use forward error correction (FEC).
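The row-and-column scheme can be sketched as follows, assuming even parity and a list-of-lists layout for the bit array. The function names are invented for this example, and only the single-bit case is handled.

```python
def encode_2d(rows):
    """Return (row parities, column parities, corner parity) for a bit array."""
    row_par = [sum(r) % 2 for r in rows]
    col_par = [sum(c) % 2 for c in zip(*rows)]
    corner = sum(row_par) % 2    # parity of the parity bits themselves
    return row_par, col_par, corner

def locate_single_error(rows, row_par, col_par):
    """Return (row, column) of a single flipped data bit, or None."""
    bad_rows = [i for i, r in enumerate(rows) if sum(r) % 2 != row_par[i]]
    bad_cols = [j for j, c in enumerate(zip(*rows)) if sum(c) % 2 != col_par[j]]
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        return bad_rows[0], bad_cols[0]
    return None

def correct_single_error(rows, row_par, col_par):
    """Flip the bad bit in place if exactly one can be identified."""
    position = locate_single_error(rows, row_par, col_par)
    if position is not None:
        i, j = position
        rows[i][j] ^= 1
    return rows
```

Because the receiver can repair the bit without asking for a retransmission, this is a small instance of forward error correction.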

Checksums

A checksum is any form of code that uses the data to compute a small, fixed-size value that is sent along with the data and is used for error checking. The receiver can apply the same computation to validate the checksum. Any bit errors in the data will yield a high probability that the computed checksum will be a different value. We already examined a simple checksum earlier. Protocols such as IP, UDP, TCP, ICMP, OSPF, and IGMP all use the Internet checksum. In this checksum, we summed up the data 16 bits at a time, adding one whenever the sum of two values resulted in a carry (overflow), and inverting the bits of the result. The Internet checksum is extremely easy to compute but provides poor detection of errors in the data.
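The Internet checksum described above can be sketched in a few lines. The function name is invented, and padding an odd-length input with a zero byte is an assumption of this sketch.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's complement of the one's complement sum of the data."""
    if len(data) % 2:
        data += b"\x00"                      # pad to a whole 16-bit word
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
        # Fold any carry out of bit 15 back into the low bits.
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF
```

A receiver can run the same function over the data with the checksum field filled in; a result of 0 means the sum came out to all ones, which is the pass condition.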

Cyclic redundancy check

A cyclic redundancy check (CRC) is a much more robust checksum that is particularly good at detecting multiple consecutive bad bits. An n bit CRC code will generally detect a burst of up to n bad bits. CRC codes are a type of code known as a linear block code. A block code treats the data as a sequence of fixed-length integers. The Internet checksum is also a form of a block code. A CRC is a bit more computationally intensive to compute than an Internet checksum but, when done at the MAC layer, is generally done by the hardware of the adapter, and therefore does not take time from the processor.

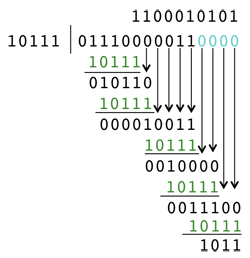

A CRC computation is similar to long division but with the use of exclusive-ors instead of subtraction. The entire data to be checksummed is treated as a large binary number that is left-shifted by the desired length of the CRC code (e.g., r bits). This number is “divided” by a value called the generator (abbreviated by G). The generator is pre-defined and agreed to by both sides. For an r-bit CRC code, the generator must be r + 1 bits long, start with a 1 and be an odd number (end with a 1). The “division” is a series of exclusive-ors on the data, aligning G with the leftmost 1 in the remaining data, exclusive-oring the result, and repeating until there is no more room to position the r + 1 bits of G under the data. What’s left is the remainder, which is the CRC value.

The figure above shows a CRC computation with a Generator of 23 (10111) yielding a remainder of 11 (1011), which becomes the checksum. The data is sent with the CRC number in place of the 0 bits that filled the data on the right when we left shifted the data by r bits. The recipient performs the same division without left shifting the value it receives. If the remainder is 0, then this indicates that there is no data corruption.
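The exclusive-or "division" can be sketched on strings of bits. This is an illustrative bit-at-a-time version with invented function names; real adapters compute CRCs in hardware, and the data values in the usage lines are made up rather than taken from the figure.

```python
def mod2_remainder(bits: str, generator: str) -> str:
    """Remainder of dividing bits by generator using XOR instead of subtraction."""
    r = len(generator) - 1
    work = [int(b) for b in bits]
    gen = [int(b) for b in generator]
    # Slide G along the data; XOR it in wherever the leading bit is 1.
    for i in range(len(work) - r):
        if work[i]:
            for j, g in enumerate(gen):
                work[i + j] ^= g
    return "".join(str(b) for b in work[len(work) - r:])

def crc(data_bits: str, generator: str) -> str:
    """Left-shift the data by r bits (append r zeros) and take the remainder."""
    r = len(generator) - 1
    return mod2_remainder(data_bits + "0" * r, generator)

def is_intact(received_bits: str, generator: str) -> bool:
    """Receiver side: divide without shifting and expect a zero remainder."""
    return set(mod2_remainder(received_bits, generator)) <= {"0"}

if __name__ == "__main__":
    g = "10111"                      # the 5-bit generator mentioned in the text
    data = "1101"                    # hypothetical 4-bit message
    codeword = data + crc(data, g)   # CRC replaces the appended zeros
    print(codeword, is_intact(codeword, g))
```

Sender and receiver run the same division; the only difference is that the sender appends zeros first and the receiver divides the frame as received.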

Multiple access protocols

A multiple access protocol is a MAC protocol that is used on a network where multiple hosts need to send messages on the same physical link (e.g., the same wire or range of radio frequencies). These types of links are broadcast links since multiple hosts are connected to the same medium. The multiple access problem is that of coordinating transmissions from multiple hosts to avoid collisions. A collision is when two or more hosts transmit at the same time on the same frequency, causing one transmission to interfere with the other, and damaging both signals. There are three strategies for handling this.

1. Channel partitioning

Channel partitioning means dividing a communication channel either into fixed time slots or fixed frequency bands. Time division multiplexing (TDM) divides a channel into time slots. For n hosts, each host gets 1/n of the time slots. A host can transmit only during its defined time slot. Frequency division multiplexing (FDM) divides a channel into n frequency bands. A host can transmit at any time but can only transmit on its allotted frequency band. As with TDM, this channel partitioning scheme does not make efficient use of the bandwidth. Frequency bands or time slots go wasted if the nodes to which they are allocated have nothing to send.

2. Taking turns

MAC protocols based on taking turns allow each node to have full use of the network and coordinate access to make sure that no other node will be using the network. This ensures that collisions cannot occur.

A polling protocol uses a master that polls each of the nodes on the network, each in turn, to see if one of them wants to transmit data. This ensures that there are no collisions as the master will not poll another node until transmission is complete. However, a node incurs the delay of waiting to be polled (think about a LAN with thousands of nodes on it). Also, if the master dies, the network becomes inoperable.

A token passing protocol uses a special frame, called a token, that is passed around the nodes on the network in sequence. If a node gets a token, then it can transmit data. Once it is done transmitting, it passes the token to its neighbor. If it has nothing to transmit, it passes the token to its neighbor immediately. Unlike a polling protocol, there is no need for a master. However, it suffers from some of the same problems. A node has to wait for the token and, should a node fail to pass the token, no other node will be able to transmit.

3. Random access

With random access protocols, any node has full use of the channel but only one node at a time may use it. There are no scheduled time slots as in TDM. Because there is no schedule on who can transmit when, random access protocols have a chance of collision when multiple nodes decide to transmit at the same time.

In the Slotted ALOHA protocol, access to the network is divided into time slots. Time slots are not assigned to any node. A node can transmit at the start of any time slot and must finish transmission at the end of that slot. If two nodes happen to transmit during the same time slot, a collision results and both nodes will have to retransmit the frame. Each of them retransmits it in the next time slot with some predefined probability p. Imagine the decision is made by a node flipping a weighted coin to decide whether to retransmit in the next time slot. If the flip tells it not to retransmit, it makes the same decision for transmitting on the time slot after that (and so on). Slotted ALOHA introduced the concept of a randomized wait after a collision but it does not use the network very efficiently. For a large number of transmitting nodes, only about 37% of time slots will have useful data. The rest will be empty or have collisions.
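The 37% figure can be reproduced with a short calculation. Assuming n nodes that each transmit in a slot independently with probability p, a slot carries useful data only when exactly one node transmits, which happens with probability n·p·(1−p)^(n−1). The function name below is invented for this sketch.

```python
import math

def slotted_aloha_efficiency(n: int, p: float) -> float:
    """Probability that exactly one of n nodes transmits in a given slot."""
    return n * p * (1 - p) ** (n - 1)

if __name__ == "__main__":
    # Choosing p = 1/n maximizes the expression; it tends to 1/e as n grows.
    for n in (2, 10, 100, 1000):
        print(n, round(slotted_aloha_efficiency(n, 1 / n), 4))
    print("1/e =", round(1 / math.e, 4))
```

The remaining slots are either idle (nobody transmitted) or wasted on collisions (two or more nodes transmitted).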

Carrier Sense Multiple Access with Collision Detection (CSMA/CD) is a successor to Slotted ALOHA and is the protocol used on Ethernet on a shared link (which was the design of the original Ethernet). CSMA/CD has two parts to it: carrier sensing means that a node will not just transmit when it is ready to do so but will first listen to the communication channel and wait until it is clear (i.e., nobody else is transmitting); collision detection means that a node is listening to the channel at the same time that it is transmitting. If it detects interference (a collision), it immediately stops transmitting.

After a node detects a collision and stops transmitting, it will have to retransmit that frame. Before doing so, it will wait a random interval. The desired heuristic is to pick a random wait time from a long time interval if there is a lot of traffic on the network and to pick a random wait time from a short time interval if there is little traffic on the network. Of course, a network adapter has no idea what is going on in the rest of the network. Instead, each time the transmission of a frame results in a collision, a random backoff value will be chosen from a time interval that is double the previous time interval. This technique is called binary exponential backoff.
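Binary exponential backoff can be sketched as a window that doubles with each consecutive collision. The function names and the idea of measuring the wait in abstract "slots" are assumptions of this sketch, and the `max_exponent` cap is included as a parameter rather than as a statement about any particular standard.

```python
import random

def backoff_window(collisions: int, max_exponent: int = 10) -> int:
    """Number of candidate wait slots after this many consecutive collisions."""
    return 2 ** min(collisions, max_exponent)

def pick_backoff(collisions: int, rng=random) -> int:
    """Pick a random wait, in slots, from the current window."""
    return rng.randrange(backoff_window(collisions))

if __name__ == "__main__":
    for attempt in range(1, 6):
        print(attempt, backoff_window(attempt), pick_backoff(attempt))
```

Each collision is taken as a hint that the network is busier than previously assumed, so the adapter spreads its next attempt over a window twice as large.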

Ethernet

Ethernet was designed as a random access packet switched network that uses CSMA/CD over a shared wire. It has been evolving since the mid-1970s and continues to evolve. Not only did it get faster (from 2.94 Mbps to 10 Gbps and beyond) but it moved from a shared bus topology to a switched star topology, where every node is connected by a dedicated cable to a central switch.

There are slight variations on the format of an Ethernet frame depending on which version of the protocol is used but the key parts of an Ethernet frame are:

- MAC destination address

- MAC source address

- type, indicating the higher level protocol encapsulated within the data field (e.g., an IP datagram). It also identifies the version of the Ethernet protocol used in the frame.

- payload: the data

- CRC checksum

Surprisingly, the frame length is missing in the most common version (Ethernet II) since the end of the frame is recognized by the hardware when the carrier signal drops. The packet driver on the adapter counts bytes as they arrive and stops when the receiver hardware has no more bytes to receive.

Address Resolution Protocol and Neighbor Discovery Protocol

Ethernet addresses are 48-bit addresses that are globally unique. They are generally assigned by the manufacturer of the adapter. These MAC addresses are unrelated to IP addresses. Any IP datagram needs to be encapsulated in an Ethernet frame before it can be transmitted on an Ethernet network. The MAC address in this frame is the address of the next hop for that datagram. The Address Resolution Protocol (ARP) allows a host to find a MAC address that corresponds to a desired IP address.

If a host needs to send a datagram to a system on its subnetwork, it must encapsulate the datagram in an Ethernet frame whose destination address is the MAC address of the recipient. If a host needs to send a datagram to a system that is not on its subnetwork, it needs to look up the destination IP address in its routing table and encapsulate the datagram in an Ethernet frame whose destination address is the MAC address of the first-hop router that is responsible for that IP destination.

ARP maintains a cache of recently-used IP-MAC address mappings in an ARP table. If the IP address it needs is not in the table, then it broadcasts an ARP query that contains the desired IP address. Every adapter on the LAN will receive it (it’s an Ethernet broadcast). If one of the adapters owns that IP address, it responds directly to the sender with an ARP response containing the corresponding MAC address.

IPv6 does not support ARP and uses a similar but more efficient mechanism called the Neighbor Discovery Protocol (NDP). Every IPv6 host must listen to a special multicast IP address that is derived from its own IP address. The multicast Ethernet MAC address is, in turn, derived from that IP multicast address. Hence, a query (called a neighbor solicitation) will be sent as a multicast message and delivered only to those hosts whose IP addresses map to the same Ethernet MAC address. Most likely, there will only be one such address in a LAN. The benefit of this method over ARP is that every host on the LAN is not interrupted with a query.
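The "special multicast IP address derived from its own IP address" is the solicited-node multicast address. The text above does not give its layout; the sketch below relies on the IPv6 addressing architecture, which defines it as the prefix `ff02::1:ff00:0/104` followed by the low 24 bits of the host's address. The function name and the sample address are invented.

```python
import ipaddress

# Fixed 104-bit prefix for solicited-node multicast addresses.
SOLICITED_NODE_BASE = int(ipaddress.IPv6Address("ff02::1:ff00:0"))

def solicited_node_address(unicast: str) -> str:
    """Derive the solicited-node multicast address for an IPv6 address."""
    low24 = int(ipaddress.IPv6Address(unicast)) & 0xFFFFFF
    return str(ipaddress.IPv6Address(SOLICITED_NODE_BASE | low24))

if __name__ == "__main__":
    print(solicited_node_address("fe80::1234:5678:9abc:def0"))
```

Two hosts collide on the same group only if their addresses share the same low 24 bits, which is why a solicitation usually reaches a single host.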

Multicast addressing

As with IP, Ethernet defines a range of MAC addresses that are reserved for use as multicast addresses. An Ethernet controller may, depending on the hardware, be configured to receive multicast frames in several ways:

- If a hash of a MAC address matches a certain value, accept the frame.

- If a MAC address matches one of several MAC addresses, accept the frame. This is usually limited to a small number of addresses.

- Accept all multicast frames in multicast promiscuous mode.

The link-layer software driver needs to be prepared to receive extraneous frames and discard them if they contain destination addresses that the host is not interested in receiving.

While IP and Ethernet MAC addresses are completely unrelated on a host, multicast addresses are related since there would otherwise be no way of identifying the set of MAC addresses that need to receive a specific IP multicast datagram. Each host needs to define a multicast MAC address that can be derived from the IP address so that each receiver can listen on that address and each transmitter can transmit to that address. Both IPv4 and IPv6 use a similar approach. A number of least-significant bits of the IP multicast address (23 out of 28 bits for IPv4; 32 out of 112 bits for IPv6) are copied onto a defined MAC multicast address. The IP driver will need to discard any extraneous multicast packets that arrive because the lower bits of the multicast address happened to be the same.
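The bit-copying can be sketched as below. The fixed MAC prefixes are not stated in the text above; this sketch uses `01:00:5e` for IPv4 and `33:33` for IPv6, which are the values defined by the relevant RFCs. The function names are invented.

```python
import ipaddress

def _format_mac(value: int) -> str:
    """Render a 48-bit integer as colon-separated hex bytes."""
    return ":".join(f"{(value >> shift) & 0xFF:02x}" for shift in range(40, -8, -8))

def ipv4_multicast_mac(addr: str) -> str:
    """Copy the low 23 bits of an IPv4 multicast address into 01:00:5e:00:00:00."""
    low23 = int(ipaddress.IPv4Address(addr)) & 0x7FFFFF
    return _format_mac(0x01005E000000 | low23)

def ipv6_multicast_mac(addr: str) -> str:
    """Copy the low 32 bits of an IPv6 multicast address into 33:33:00:00:00:00."""
    low32 = int(ipaddress.IPv6Address(addr)) & 0xFFFFFFFF
    return _format_mac(0x333300000000 | low32)
```

Since only 23 of the 28 group ID bits survive in the IPv4 case, 32 different IPv4 groups share each MAC address (for example `224.0.0.1` and `224.128.0.1`), which is why the IP layer still has to filter what the adapter accepts.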

Link-layer switches: Switched Ethernet

Ethernet began as a shared network using a bus topology. As coaxial cables gave way to twisted pair wiring (phone wires), adapters on different hosts could no longer tap into the same cable. Ethernet moved to a star topology where a separate cable connected each adapter to a central point: an Ethernet hub. An Ethernet hub simulated a shared Ethernet network by taking every bit that was received on one interface and transmitting it onto every other interface.

Hubs evolved into switches. While switches look similar to hubs, they are more intelligent and improve the performance of Ethernet networks considerably. A switch essentially acts like a link-layer router. It forwards frames received on one interface (connector) to another. Unlike a router, a switch is self-learning. It learns which MAC addresses correspond to which interfaces on the switch by looking at the source address of frames that arrive on each interface. This mapping of MAC addresses to interfaces is stored in a forwarding table (also called a switch table or a MAC table). Because switches may be connected to each other, multiple MAC addresses may map to a single interface on a switch (that interface happens to connect to another switch). If a switch receives a frame with a destination address that is missing from its table, it simply sends a copy of that frame out onto all other interfaces.
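The self-learning behavior can be sketched as a table that is filled in from source addresses and consulted for destination addresses. The class name, port numbers, and shortened MAC strings are invented; the sketch floods to every port except the one the frame arrived on and ignores table aging and broadcast addresses.

```python
class LearningSwitch:
    """Minimal model of a self-learning link-layer switch."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.table = {}  # MAC address -> port on which it was last seen

    def receive(self, src, dst, in_port):
        """Learn from the source address; return the ports to forward onto."""
        self.table[src] = in_port
        out_port = self.table.get(dst)
        if out_port is None:
            # Unknown destination: flood to every other port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the arrival segment: nothing to do
        return [out_port]
```

No configuration is needed: the first frame toward an unknown host is flooded, and the reply from that host teaches the switch where it lives.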

The biggest benefit from switches is that collisions can no longer occur. A link between a switch and a host is full duplex: frames can be sent concurrently with frames being received. The switch itself will queue and forward frames (this leads to the possibility of buffer overflow, but not collision). Adapters operating in full duplex mode have no need to do collision detection.

Virtual Local Area Networks (VLANs)

Some Ethernet switches can be configured to act as multiple logical, or virtual, switches, thereby creating several distinct local area networks. These networks are called Virtual Local Area Networks (VLANs) and they look and feel like distinct LANs. Each interface on the switch can be assigned, or reassigned, to one of these VLANs. Operations such as broadcasts and link-layer multicasts will remain within a single VLAN.

VLANs can be extended geographically or in capacity by connecting multiple switches together. Instead of running a cable for each VLAN between the switches, a single cable may be used to connect the switches to handle all the traffic that flows on all of the VLANs. This is called VLAN trunking. To identify which VLAN an Ethernet frame belongs to, the Ethernet frame is augmented with a VLAN tag. This tag is removed when the frame is switched to a non-trunk interface.

With VLAN switches, an administrator can define which VLAN any device resides on, regardless of which switch it is connected to. This allows the administrator to segment groups of computers (e.g., accounting vs. development vs. test) without having to connect each group to its own dedicated switch.

Wireless Networks

A base station is a device that sends and receives data to and from wireless hosts. It may also coordinate transmission among hosts, with directives on who can transmit, when, and what signal strength. The base station generally interfaces to other, usually wired, networks thereby connecting the wireless devices with the wider Internet. Examples of base stations are cell towers and wireless access points.

A wireless device, called a station, may operate in infrastructure mode, in which case traditional network services such as DHCP, DNS, and routing as well as connectivity to the Internet are expected to be provided through the base station and the wired network to which it connects. Alternatively, hosts may operate in ad hoc mode, also known as peer-to-peer mode. In this case, there is no base station or back-end infrastructure available. Hosts are self-configuring and need to figure out how to communicate among themselves, which includes assigning unique addresses, discovering names, and deducing routes to each other.

802.11

802.11, also known as Wi-Fi, is a set of standards for wireless local area networking. Standards such as 802.11a, 802.11b, 802.11g, 802.11n, and 802.11ac define operating frequencies and data encoding techniques used for sending and receiving frames on a wireless LAN. Most 802.11 systems operate in either the 2.4 or 5 GHz frequency bands.

An 802.11 base station is known as an access point (AP). The collection of an access point and one or more mobile wireless devices (stations) is called a basic service set (BSS). Each BSS has a unique ID, called the BSSID, which happens to be the MAC address of the BSS’s access point. Devices that interact with an access point operate in infrastructure mode. The access point connects to a wired Ethernet infrastructure. 802.11 devices can also operate in ad hoc mode, where devices communicate directly with each other with no need of an access point.

Each access point, in addition to having a BSSID, has a Service Set Identifier (SSID), which is a human-friendly text name that is assigned by the administrator who configures the AP. Each BSS operates over a range of frequencies identified as a channel. Adjacent channels partly overlap with each other. For example, in the U.S., the allowable non-overlapping channels in the 2.4 GHz band are 1, 6, and 11. If adjacent BSSes use these distinct channels, then frames sent by a device in one BSS will never interfere with those in another BSS.

A station (a wireless endpoint device) needs to find and associate itself with an AP. Two techniques are available for this. With passive scanning, the AP periodically sends beacon frames, each containing the AP’s SSID and MAC address (BSSID). The beacon is typically sent every 100 ms. The station scans all channels, searching for beacon frames from any APs in the area.

With active scanning, a station may broadcast a probe request frame. It sends this to a broadcast address (ff:ff:ff:ff:ff:ff) and sets a timer to wait for any answers. If no answer has been received at the end of the time period, the station moves to the next channel and repeats the process. APs that receive the probe will respond with a probe response frame that contains their identity, supported data rates, and other information. A station then selects an access point with which to associate. This may be done by the user or programmatically (e.g., the AP with the strongest signal or one that has been seen in the past). The station sends an association request frame, receives an association response from the AP and is now part of that AP’s BSS. It can then send a DHCP discovery message to configure itself on the IP network.

802.11 MAC

There are a couple of fundamental differences between 802.11 and Ethernet, apart from one being wireless and the other being wired. Operating in a wireless medium results in considerably higher bit-error rates. Also, while an Ethernet transceiver, when operating on a shared medium, can listen while transmitting, an 802.11 transceiver cannot. Because of this, Ethernet was able to perform collision detection and stop transmitting the instant that it detected a collision. 802.11 cannot do so and will transmit a complete frame, even if it gets garbled in the air.

CSMA/CA

To deal with the inability to detect collisions, 802.11 employs a random access MAC protocol called carrier sense multiple access with collision avoidance (CSMA/CA). The key part of CSMA/CA is that, if stations sense a busy channel, they all perform a random backoff and then try again. Collisions are likely to occur if multiple stations wait for a busy channel to become clear and then start to transmit at the same time. To avoid this situation, CSMA/CA tells the stations to wait a random time after they sensed a busy channel became clear, and then transmit the frame. The random time counter counts down only when the channel is sensed to be clear; it pauses whenever a signal is detected on the channel. The idea is that stations pick different random values and when they are ready to try transmitting again, they will not transmit at the same time: somebody will end up going first, causing the channel to be busy for others.
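
The countdown described above can be sketched as a small simulation. This is a simplified model, not the 802.11 state machine: the function name `run_contention` is hypothetical, backoff values are supplied by the caller instead of being drawn at random, and stations that collide are simply removed rather than retrying with a new backoff.

```python
def run_contention(backoffs):
    """Simulate CSMA/CA-style backoff countdown.

    backoffs maps a station name to its initial backoff counter (in idle slots).
    Returns a list of rounds; each round lists the stations that transmit
    together. A round with more than one station represents a collision.
    """
    remaining = dict(backoffs)
    rounds = []
    while remaining:
        # Counters only decrease while the channel is idle, so the station
        # (or stations) with the smallest counter reaches zero first.
        smallest = min(remaining.values())
        senders = sorted(s for s, b in remaining.items() if b == smallest)
        rounds.append(senders)
        for s in senders:
            del remaining[s]
        # Everyone else counted down the same number of idle slots and now
        # pauses while the channel is busy, keeping what is left.
        for s in remaining:
            remaining[s] -= smallest
    return rounds


if __name__ == "__main__":
    print(run_contention({"A": 3, "B": 7, "C": 3, "D": 5}))
```

In this run, A and C picked the same value and collide, which is why the random choice needs a large enough range to make ties unlikely.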

802.11 ARQ

Since 802.11 cannot listen while transmitting to detect a collision and because there is a higher likelihood of data corruption on a wireless network, 802.11 adds an ARQ protocol (acknowledgements and retransmissions). After a node sends a frame, the receiver (AP or station) validates the CRC in the frame and sends back an acknowledgement frame. If the sender does not receive the acknowledgement, it increases its backoff value, counts down a random interval while the channel is free, and retransmits the frame. Like CSMA/CD, 802.11’s CSMA/CA uses binary exponential backoff. Because 802.11 uses an ARQ protocol, the 802.11 frame contains a sequence number to allow a receiver to detect duplicate frames. After a certain number of retransmissions, the sender gives up, so reliable delivery is not assured.
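
Binary exponential backoff can be illustrated with a short sketch. The function names are hypothetical, and the window limits of 15 and 1023 slots are assumed typical values, not figures taken from this guide.

```python
import random


def contention_window(retries, cw_min=15, cw_max=1023):
    """Return the contention window (in slots) after a number of failed tries.

    The window roughly doubles on every unacknowledged transmission and is
    capped at cw_max. cw_min and cw_max are assumed example values.
    """
    return min((cw_min + 1) * 2 ** retries - 1, cw_max)


def pick_backoff(retries, rng=random):
    """Pick a random backoff counter in the range 0..contention window."""
    return rng.randint(0, contention_window(retries))


if __name__ == "__main__":
    for attempt in range(8):
        print(attempt, contention_window(attempt))
```

Doubling the window after each failure spreads retransmissions over a wider range, which lowers the chance that the same stations collide again.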

Because of the higher likelihood of errors and the time expense of retransmission, 802.11n and 802.11ac systems also support the optional use of an error correcting code (low-density parity check code, LDPC). This is in addition to the CRC error-detection code.

802.11 RTS/CTS

A unique problem in wireless networks is that a station does not necessarily know if a channel is busy or not because it can be out of radio range of another station that is transmitting while both stations are in range of an access point. This is called the hidden node problem. In this case, a station may transmit when it thinks the channel is clear, not realizing that a transmission was already taking place between the AP and another station. This is unavoidable but is ameliorated via the optional use of RTS/CTS (Request to Send / Clear to Send). Before transmitting a frame, a station sends a Request to Send (RTS) message to the AP, asking to reserve the communication channel for a message of a specific size. The AP then responds with a Clear to Send (CTS) message, giving it permission to send the frame. The CTS message is broadcast so that all stations will get the message and know that they should not transmit anything during that interval. RTS and CTS frames are short and sending them reduces the chance of collision compared to sending a long data frame. The RTS/CTS mechanism serves as an extension of carrier sensing. It allows a station to be informed that a channel will be busy even if it may not have the ability to pick up the signal from the station that will be transmitting the data.

802.11 addresses and host migration

The 802.11 frame is conceptually similar to an Ethernet frame. In fact, they share the same MAC addressing scheme so that Ethernet addresses interoperate with 802.11 addresses. The access point serves as a bridge between the two protocols and converts 802.11 frames into Ethernet frames when they need to go on an Ethernet link and vice versa. One distinction between Ethernet and 802.11 frames is that an 802.11 frame has four address fields, three of which are used in infrastructure mode. One address identifies the wireless destination. Another identifies the wireless source. In cases where the frame is routed between the wireless and wired network, the third address identifies the MAC address of the wired device (regardless of whether it is a sender or a receiver). The reason for three addresses is that, unlike an Ethernet switch, an AP has a distinct MAC address and is addressed as an intermediate destination both for frames between wireless stations and for frames that go between wireless stations and devices on the wired network. If a frame needs to go to the wired network, a station will address it to the AP, which will then create an Ethernet MAC frame that is addressed to the targeted wired destination.

A station is part of a BSS. That means it is associated with a single access point. To extend the range of a wireless LAN, multiple access points may be deployed that share the same SSID. A device can dissociate itself from one AP and associate itself with another one when it detects a stronger signal from the new one. This is called host migration. Since the APs are connected to the same LAN via the same switch (or a set of connected switches), there is no problem with the station keeping the same IP address and the state of any TCP sessions. The challenge is to get the Ethernet switch to immediately forward frames to the latest access point that the station has joined. To support host migration at the switch, an access point broadcasts an Ethernet frame that contains the migrated host’s source MAC address. The switch, being self-learning, will immediately update its forwarding table, associating the device’s MAC address with the interface on which the frame arrived.
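
The self-learning update that makes host migration work can be sketched as follows. The class `LearningSwitch`, its method names, and the port numbers are hypothetical; real switches also age out entries, which is omitted here.

```python
class LearningSwitch:
    """Toy self-learning switch: maps a source MAC address to an interface."""

    def __init__(self):
        self.table = {}

    def receive(self, src_mac, in_port):
        # Learn (or overwrite) where this source address was last seen.
        self.table[src_mac] = in_port

    def out_port(self, dst_mac):
        # None means the address is unknown and the frame would be flooded.
        return self.table.get(dst_mac)


if __name__ == "__main__":
    sw = LearningSwitch()
    station = "02:00:00:00:00:01"
    sw.receive(station, in_port=1)   # station is behind the AP on port 1
    sw.receive(station, in_port=2)   # new AP broadcasts a frame with the station's MAC
    print(sw.out_port(station))
```

Because the newest entry replaces the old one, a single frame from the new access point is enough to redirect traffic for the migrated station.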

Power management

Most wireless devices are battery powered and power consumption is a key design factor. A transceiver on a wireless device can quickly go to sleep and wake up at a preset interval. Before a device puts its 802.11 transceiver to sleep, it sends a message to the AP stating that it is going to sleep (this is a bit in the MAC header). Upon receiving this message, the AP will queue but not send any frames targeted for that device. The device’s transceiver is programmed to wake up before the AP is scheduled to send its next beacon frame (which the AP does typically every 100 ms). When the AP sends a beacon frame, it includes a list of MAC addresses of stations that have buffered (queued) frames. If the station finds its own address in the list, it stays awake and requests the frames via a polling message. Otherwise, it can go back to sleep until the next beacon.

Quality of Service in Networks

IP was designed as a system that would provide best-effort packet delivery but with no guarantees on the path a packet will take, whether it gets dropped, or what order it arrives in. Hence, there is no concept of delivering any specific grade of service. As IP networks began to be used for carrying continuous media, such as voice and video, several approaches were taken to attempt to provide better controls for scheduling the delivery of IP packets.

Quality of service (QoS) on a network is characterized by four factors:

- Bandwidth (or bit rate): the average number of bits per second over the network

- Delay (or latency): the average time for data to get from one endpoint to another

- Jitter: the variation in the delay

- Errors (or packet loss): the percentage of packets that do not reach their destination or reach it with errors

Quality of service problems arise because we do not have unlimited resources.

- Congestion

- Congestion arises when more packets are flowing into a router than can flow out. It is largely due to bandwidth mismatch, such as having a 10 Gbps network routing traffic to a 1 Gbps link, or to aggregation, such as having four 1 Gbps links all sending traffic that needs to be routed over a single 1 Gbps link. If a router gets packets at a faster rate than it can route, the result will be packet loss. The router may also choose to block the arrival of new packets.

- Latency

- Latency is due not only to the propagation delay of transmitting data on a network but also to various delays along the route. The sending host spends time in packetization, the creation of packets that need to be transmitted. Serialization is the queuing of packets for transmission onto the network since they can only go out one at a time. Each router incurs a processing delay due to inspecting the packet and moving it onto the appropriate output queue. Then there is the queuing delay of waiting for the router to schedule the packet for transmission. Once it reaches the destination, there is the processing delay of depacketizing the packet and moving it through the network stack, delivering the data to the application, and scheduling the process to consume it.

- Jitter

- If multiple packets arrive at a router or switch at approximately the same time but need to be transmitted over a single link, they need to be queued since only one can be sent at a time. Some packet will be first and some packet will be last. This variation in dequeuing time creates jitter. Jitter is also caused by having some packets take a different route than others and, most significantly, by the retransmission of lost packets.

- Packet loss

- Packets can be corrupted or lost due to collision, interference, and signal degradation in the network. The most likely cause, however, is that of a router simply dropping packets because a queue is full. This condition is known as buffer overrun.

Achieving quality of service

Quality of service on a network is achieved via resource allocation and packet prioritization. Network service quality can be strictly enforced or may be attempted via a best-effort approach. Hard QoS refers to a system that is designed to provide a guaranteed level of service via reservations while soft QoS refers to a system that uses a best-effort approach to try to deliver, but not guarantee, the desired level of service.

Two broad approaches to providing data channels with managed quality of service on a network are admission control and traffic control. Admission control is generally designed to enforce hard QoS. With admission control, we ask that applications first request a particular quality of service from the network. The network (i.e., all the routers in the path from the source to the destination) will reserve resources for switching and routing the packets to conform to that grade of service and grant the request to the application. If any router cannot commit to the needed resources, the request will be denied.

Traffic control provides soft QoS. Soft QoS on a network refers to prioritization of packets without any reservation of resources from routers or endpoints or any a priori negotiation for a level of service. With traffic control, applications may send data onto the network freely but the network elements (routers) will classify, queue, schedule, and sometimes drop packets based on various criteria.

A link scheduling discipline defines how packets are scheduled at the output queue. When we looked at router architecture, we considered only a single queue per output interface with packets transmitted in a first-in-first-out (FIFO) manner. This is the simplest approach but does not offer any opportunity for differentiating one datagram from another based on its service class.

A router can set up multiple queues for an output link and place different classes (e.g., based on addresses, ports, or other fields in the IP header) of packets onto different queues. A packet scheduler then picks the next packet from a specific queue for transmission. A round-robin scheduler will give each class of packet (queue) equal priority. A priority scheduler will always service high-priority queues first but can lead to starvation of low-priority queues: low-priority queues might never get serviced. A desirable characteristic of queue management is traffic isolation: ensuring that one class of service cannot adversely affect another class even if it has a higher priority. A weighted fair queuing (WFQ) approach gives each queue a priority level but also ensures a certain minimum percentage of the available link bandwidth so that starvation does not occur.

Traffic shaping and traffic policing

Traffic shaping is when a router queues packets in certain flows during peak usage for later transmission when there is available bandwidth. With traffic policing, traffic that exceeds the allocated bandwidth for a particular flow is discarded.

A leaky bucket is a traffic shaping algorithm that converts a bursty flow into a constant bitrate flow: it removes jitter. The bucket is represented by a queue that receives data at an irregular rate (in bursts). The queue is emptied at a constant rate (the hole at the bottom of the bucket), resulting in an absence of jitter. If there is nothing left to read in the queue, we have a buffer underrun and jitter occurs. If the queue is already full when data arrives, we have a buffer overrun and packet loss occurs.
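
A minimal sketch of the leaky bucket, assuming time advances in fixed ticks and packets are all the same size. The function name `leaky_bucket` and its parameters are hypothetical.

```python
def leaky_bucket(arrivals, capacity, rate=1):
    """Shape a bursty arrival pattern into a constant-rate output.

    arrivals: number of packets arriving in each tick (bursty input).
    capacity: maximum number of packets the queue (bucket) can hold.
    rate:     packets drained from the queue per tick (the hole in the bucket).
    Returns (sent_per_tick, dropped), where dropped counts buffer overruns.
    """
    queued = 0
    dropped = 0
    sent_per_tick = []
    for burst in arrivals:
        accepted = min(burst, capacity - queued)
        dropped += burst - accepted       # overrun: bucket already full
        queued += accepted
        sent = min(rate, queued)          # underrun when the queue is empty
        queued -= sent
        sent_per_tick.append(sent)
    return sent_per_tick, dropped


if __name__ == "__main__":
    print(leaky_bucket([3, 0, 0, 5, 0, 0, 0], capacity=3))
```

In this run the output stays at one packet per tick until the queue empties in the last tick (an underrun), and two packets of the second burst are lost because the bucket is full (an overrun).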

A token bucket is a “bucket” that holds tokens that are generated at a constant rate. In order to transmit a packet, the bucket must be drained of the number of tokens that is proportionate to the packet’s size. The token bucket algorithm does not smooth out traffic like the leaky bucket does but ensures that an average bitrate is enforced for a specific flow. For example, a buildup of tokens can result in a burst of data.
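
A token bucket can be sketched as follows, assuming one token pays for one byte and tokens are added once per tick. The class name, method names, and the numbers in the usage are hypothetical.

```python
class TokenBucket:
    """Toy token bucket: tokens accumulate at a fixed rate up to a capacity."""

    def __init__(self, rate, capacity):
        self.rate = rate            # tokens added per tick
        self.capacity = capacity    # maximum tokens the bucket can hold
        self.tokens = 0             # assumed to start empty

    def tick(self):
        # Tokens beyond the capacity are discarded.
        self.tokens = min(self.capacity, self.tokens + self.rate)

    def try_send(self, size):
        # A packet may go out only if enough tokens are available to pay for it.
        if size > self.tokens:
            return False
        self.tokens -= size
        return True


if __name__ == "__main__":
    bucket = TokenBucket(rate=100, capacity=500)
    for _ in range(10):
        bucket.tick()                      # idle period: tokens build up to 500
    print([bucket.try_send(200) for _ in range(3)])
```

After an idle period, two 200-byte packets can leave back to back (a burst) before the third must wait for more tokens, while the long-term average stays bounded by the token rate.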

DiffServ

Differentiated services (DiffServ) is a way for programmers to provide advisory information inside an IP header on how a packet should be processed by routers. A packet can be assigned a specific service code by setting a value in the 6-bit Differentiated Services Codepoint (DSCP) field of the IP header. DiffServ is an example of traffic classification. It is entirely up to the routers to decide how to process this information (e.g., via WFQ), what the service levels mean, or even whether to process it at all. Differentiated services are an example of soft QoS: there is no guarantee on the actual quality of service that will be delivered. A common use for DiffServ is to try to provide a better quality of service for voice over IP (VoIP) phone calls by tagging those packets for Expedited Forwarding (EF) service, which is defined to have the characteristics of low delay, low loss, and low jitter. Whether DiffServ values are used at all and how they are interpreted is strictly up to the ISP.

IntServ

Integrated Services (IntServ) is an approach that relies on end-to-end reservation of services. A transmitting host specifies its traffic (as a token bucket with a given rate and size) and requested level of service guarantee. The Integrated Services system relies on the Resource Reservation Protocol (RSVP), which was developed to allow a flow of packets to be routed with bitrate guarantees. RSVP is an example of a soft state protocol, meaning that state is not maintained perpetually but reservations expire unless they are refreshed periodically.

The problem with guaranteeing this is that all routers in the path from the source to the destination must be configured to support RSVP: each intermediate router must commit to reserving the needed amount of routing resources to guarantee the desired level of service. If one ISP in the path does not support this then all bets are off. Integrated Services is an example of admission control while differentiated services is an example of traffic control.

RTP and RTCP

The Real-time Transport Protocol (RTP) is an application-level protocol on top of UDP. It does not define any mechanisms for data delivery or QoS control. As with any UDP datagrams, neither in-order delivery nor delivery in general is assured. What RTP does is allow a sender to attach timestamps to packets so that a receiver can play them back at the same rate at which they were sent.

An RTP header contains:

- payload type: identifies the type of video or audio encoding. An application can change the encoding type mid-stream (e.g., to switch to a lower bandwidth codec)

- sequence number: the app can detect missing packets and conceal data loss

- timestamp: the app can play back data at appropriate intervals

- source ID of stream: uniquely identifies the stream to allow demultiplexing multiple streams that may be received at the same port

RTP is widely used for voice and video, particularly for media transport in SIP (Session Initiation Protocol) systems.

The RTP Control Protocol (RTCP) is a companion protocol to RTP and is used to provide feedback from the receiver to the sender. By comparing the arrival time between packets with the difference in timestamps in the RTP header, a receiver can compute jitter. By checking sequence numbers, a receiver can compute packet loss. The receiver can periodically send this feedback through RTCP so that the sender can make adjustments to the data stream (for example, change to a lower bandwidth codec).
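
The two receiver-side computations can be sketched as follows. The jitter function uses the running estimator from the RTP specification (each new sample moves the estimate by 1/16 of the difference); the function names are hypothetical, timestamps are assumed to be in the same units, and sequence number wraparound is ignored.

```python
def interarrival_jitter(send_times, recv_times):
    """Running jitter estimate from RTP timestamps and arrival times."""
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Difference between the spacing at the receiver and at the sender.
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


def loss_fraction(sequence_numbers):
    """Fraction of packets missing between the lowest and highest sequence number."""
    expected = max(sequence_numbers) - min(sequence_numbers) + 1
    received = len(set(sequence_numbers))
    return (expected - received) / expected


if __name__ == "__main__":
    print(interarrival_jitter([0, 20, 40, 60], [5, 25, 45, 65]))   # evenly spaced arrivals
    print(interarrival_jitter([0, 20, 40, 60], [5, 25, 61, 65]))   # one packet delayed
    print(loss_fraction([1, 2, 4, 5]))                             # packet 3 never arrived
```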

Firewalls

A firewall protects the junction between an untrusted network (e.g., external Internet) and a trusted network (e.g., internal network). Two approaches to firewalling are packet filtering and proxies. A packet filter, or screening router, determines not only the route of a packet but whether the packet should be dropped based on contents in the IP header, TCP/UDP header, and the interface on which the packet arrived. It is usually implemented inside a border router, also known as the gateway router that manages the flow of traffic between the ISP and internal network.

The packet filter evaluates a set of rules to determine whether to drop or accept a packet. This set of rules forms an access control list, often called a chain. Strong security follows a default deny model, where packets are dropped unless some rule in the chain specifically permits them. With stateless inspection, a packet is examined on its own with no context based on previously-seen packets. Stateful inspection allows the router to keep track of TCP connections and understand the relationship between packets. For example, it can recognize that a port needs to be enabled for the FTP data channel once an FTP connection has been established, or that return packets should be allowed into the network in response to outbound requests.
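
A stateless chain with a default deny policy can be sketched as follows. The `Rule` fields, the dictionary-based packet representation, and the interface names are hypothetical and do not correspond to any particular firewall's rule syntax; the first matching rule is assumed to decide the outcome.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    action: str                       # "accept" or "drop"
    protocol: Optional[str] = None    # None means "match anything"
    dst_port: Optional[int] = None
    interface: Optional[str] = None

    def matches(self, packet: dict) -> bool:
        wanted = {
            "protocol": self.protocol,
            "dst_port": self.dst_port,
            "interface": self.interface,
        }
        return all(v is None or packet.get(k) == v for k, v in wanted.items())


def filter_packet(chain, packet):
    """Return the action of the first matching rule, or drop by default."""
    for rule in chain:
        if rule.matches(packet):
            return rule.action
    return "drop"                     # default deny


if __name__ == "__main__":
    chain = [
        Rule("accept", protocol="tcp", dst_port=25, interface="external"),
        Rule("accept", protocol="tcp", dst_port=443, interface="external"),
    ]
    print(filter_packet(chain, {"protocol": "tcp", "dst_port": 443, "interface": "external"}))
    print(filter_packet(chain, {"protocol": "udp", "dst_port": 53, "interface": "external"}))
```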

Packet filters traditionally do not look above the transport layer. Deep packet inspection (DPI) allows a firewall to examine application data as well and make decisions based on its contents. Deep packet inspection can validate the protocol of an application as well as check for malicious content such as malformed URLs or other security attacks.

An application proxy is software that presents the same protocol to the outside network as the application for which it is a proxy. For example, a mail server proxy will listen on port 25 and understand SMTP, the Simple Mail Transfer Protocol. The primary job of the proxy is to validate the application protocol and thus guard against protocol attacks (extra commands, bad arguments) that may exploit bugs in the service. Valid requests are then regenerated by the proxy to the real application that is running on another server and is not accessible from the outside network. The proxy is the only one that can communicate with the internal network. Unlike DPI, a proxy may modify the data stream, such as stripping headers or modifying machine names. It may also restructure the commands in the protocol used to communicate with the actual servers (that is, it does not have to relay everything that it receives).

A typical firewalled environment is a screened subnet architecture, with a separate subnet for systems that run externally-accessible services (such as web servers and mail servers) and another one for internal systems that do not offer services and should not be accessed from the outside. The subnet that contains externally-accessible services is called the DMZ (demilitarized zone). The DMZ contains all the hosts that may be offering services to the external network (usually the Internet). Machines on the internal network are not accessible from the Internet. All machines within an organization will be either in the DMZ or in the internal network.

Both subnets will be protected by screening routers. They will ensure that no packet from the outside network is permitted into the inside network. Logically, we can view our setup as containing two screening routers:

The exterior router allows IP packets only to the machines/ports in the DMZ that are offering valid services. It would also reject any packets that are masqueraded to appear to come from the internal network.

The interior router allows packets to only come from designated machines in the DMZ that need to access services in the internal network. Any packets not targeting the appropriate services in the internal network will be rejected. Both routers will generally allow traffic to flow from the internal network to the Internet, although an organization may block certain services (ports) or force users to use a proxy (for web access, for example).

Note that the two screening routers may be easily replaced with a single router since filtering rules can specify interfaces. Each rule can thus state whether an interface is the DMZ, internal network, or Internet (ISP).

A variation on screening routers is the use of intrusion detection systems (IDS). A screening router simply makes decisions based on packet headers. Intrusion detection systems try to identify malicious behavior. There are three forms of IDS:

A protocol-based IDS validates specific network protocols for conformance. For example, it can implement a state machine to ensure that messages are sent in the proper sequence, that only valid commands are sent, and that replies match requests.

A signature-based IDS is similar to a PC-based virus checker. It scans the bits of application data in incoming packets to try to discern if there is evidence of “bad data”, which may include malformed URLs, extra-long strings that may trigger buffer overflows, or bit patterns that match known viruses.

An anomaly-based IDS looks for statistical aberrations in network activity. Instead of having predefined patterns, normal behavior is first measured and used as a baseline. An unexpected use of certain protocols, ports, or even amount of data sent to a specific service may trigger a warning.

Secure communication and authentication

Cryptography

Cryptography deals with encrypting plaintext using a cipher, also known as an encryption algorithm, to create ciphertext, which is unintelligible to anyone unless they can decrypt the message.

A symmetric encryption algorithm uses the same secret key for encryption and decryption.

A public key algorithm uses a pair of keys: data encrypted with the first key can be decrypted only with the second key and vice versa. One of these keys is kept private (known only to the creator) and is known as the private key. The corresponding key is generally made visible to others and is known as the public key. Anything encrypted with the private key can only be decrypted with the public key. This is the basis for digital signatures because the encryption can only be performed by the key’s owner. Anything that is encrypted with a public key can be decrypted only with the corresponding private key. This is the basis for authentication and covert communication because decryption can only be performed by the owner, who is the only one who has the private key.

When data is transmitted, it is broken into blocks and each block is encrypted separately. This leads to two problems. If different communication sessions contain the same messages and use the same key, an intruder can see that the same data is being sent. Secondly, a malicious party can add, delete, or replace blocks (perhaps with random junk or perhaps with blocks that were captured from previous communication sessions). Cipher block chaining (CBC) addresses these problems. Every block of data is still encrypted with the same key. However, prior to being encrypted, the data block is exclusive-ored with the previous encrypted block. The receiver does the process in reverse: a block of received data is decrypted and then exclusive-ored with the previously-received block to obtain the original data. The very first block is exclusive-ored with a random initialization vector, which must be transmitted to the remote side. Note that CBC does not make the encryption more secure; it simply makes the result of each block of data dependent on the previous block so that data cannot be inserted or deleted in the message stream.
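
The chaining step can be sketched as follows. The block cipher here is a deliberately insecure stand-in (byte-wise addition of the key) used only so the example is self-contained; every function name, the 8-byte block size, and the fixed all-zero initialization vector in the usage are assumptions for illustration. A real system would use a real cipher such as AES and a random initialization vector.

```python
BLOCK = 8  # assumed toy block size in bytes


def toy_encrypt_block(block, key):
    # Insecure placeholder for a real block cipher.
    return bytes((b + k) % 256 for b, k in zip(block, key))


def toy_decrypt_block(block, key):
    return bytes((b - k) % 256 for b, k in zip(block, key))


def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def cbc_encrypt(plaintext, key, iv):
    if len(plaintext) % BLOCK:
        raise ValueError("plaintext must be a multiple of the block size")
    prev, out = iv, []
    for i in range(0, len(plaintext), BLOCK):
        # XOR with the previous ciphertext block (or the IV), then encrypt.
        prev = toy_encrypt_block(xor(plaintext[i:i + BLOCK], prev), key)
        out.append(prev)
    return b"".join(out)


def cbc_decrypt(ciphertext, key, iv):
    prev, out = iv, []
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        # Decrypt, then XOR with the previously received ciphertext block.
        out.append(xor(toy_decrypt_block(block, key), prev))
        prev = block
    return b"".join(out)


if __name__ == "__main__":
    key, iv = bytes(range(1, 9)), bytes(8)
    message = b"AAAAAAAA" * 2                  # two identical plaintext blocks
    ct = cbc_encrypt(message, key, iv)
    print(ct[:BLOCK] != ct[BLOCK:])            # chained blocks differ
    print(cbc_decrypt(ct, key, iv) == message)
```

Without chaining, the two identical plaintext blocks would encrypt to identical ciphertext blocks, which is exactly the leak the paragraph above describes.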

A cryptographic hash function is a one-way function whose output is always a fixed number of bits for any input. By one-way, we mean that there is no way to compute the input when given the output. For good cryptographic hash functions (e.g., SHA-1, SHA-2, SHA-3), it is highly unlikely that two messages will ever hash to the same value, it is extremely difficult to construct text that hashes to a specific value, and it is extremely difficult to modify the plaintext without changing its resultant hash. The hash function is the basis for message authentication codes and digital signatures. Note that when we talk about cryptography and mention phrases such as “extremely difficult”, we mean “impossible for all practical purposes,” not that “you can do it if you spend an entire week working on the problem.”
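
The fixed-size output is easy to observe with Python's standard `hashlib` module. SHA-256 (a member of the SHA-2 family) is chosen here only as an example, and the helper name `sha256_hex` is hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as 64 hexadecimal characters."""
    return hashlib.sha256(data).hexdigest()


if __name__ == "__main__":
    # The output length is the same for an empty input and a large one.
    print(len(sha256_hex(b"")), len(sha256_hex(b"x" * 10000)))
    # A one-character change in the message produces an unrelated digest.
    print(sha256_hex(b"pay $100"))
    print(sha256_hex(b"pay $900"))
```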

Secure communication

To communicate securely using a symmetric cipher, both parties need to have a shared secret key. Alice will encode a message to Bob using the key and Bob will use the same key to decode the message. If Alice wants to communicate with Charles, she and Charles will also need a secret key. The fact that every pair of entities will need a secret key leads to a phenomenon known as key explosion. Overall, in a system with n users, there will be O(n²) keys.

The biggest problem with symmetric cryptography is dealing with key distribution: how can Alice and Bob establish a key so they can communicate securely? The Diffie-Hellman key exchange algorithm allows one to do this. Each party will generate a private key and a public key (these are not encryption keys; they are just numbers — Diffie-Hellman does not implement public key cryptography — it is unfortunate that the term was used to describe these numbers). Alice can use her private key and Bob’s public key to compute a common key. Bob can compute the same common key by using his private key and Alice’s public key. They can then communicate securely by using the common key with a symmetric cipher.
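
The exchange can be sketched with deliberately tiny numbers. The prime 23, the generator 5, and the private values 6 and 15 are textbook-sized assumptions chosen so the arithmetic can be checked by hand; real deployments use very large parameters. The function names are hypothetical.

```python
P = 23   # public prime modulus (toy size)
G = 5    # public generator


def public_value(private):
    """Compute the number a party publishes: G^private mod P."""
    return pow(G, private, P)


def shared_key(private, other_public):
    """Combine one's own private number with the other party's public number."""
    return pow(other_public, private, P)


if __name__ == "__main__":
    alice_private, bob_private = 6, 15
    alice_public = public_value(alice_private)
    bob_public = public_value(bob_private)
    # Both sides arrive at the same common key without ever sending it.
    print(shared_key(alice_private, bob_public), shared_key(bob_private, alice_public))
```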

Using true public key cryptography, such as RSA, if Alice encrypts a message with Bob’s public key, Bob will be the only one who can decrypt it since doing so will require Bob’s private key. Likewise, Bob can encrypt messages with Alice’s public key, knowing that only Alice will be able to decrypt them with her private key.

Session keys and hybrid cryptosystems

A session key is a random key that is generated for encrypting data for one communication session. It is useful because if the key is ever compromised, no lasting information is obtained: future communication sessions will use different keys. A hybrid cryptosystem uses public key cryptography to send a session key securely. The originator generates a random session key and encrypts it with the recipient’s public key. The recipient decrypts the message with the corresponding private key to extract the session key. After that, symmetric cryptography is used for communication, with messages encrypted with the session key. This has the advantages of higher performance (public key cryptography is much, much slower than symmetric cryptography), ease of communicating with multiple parties (just encrypt the session key with the public keys of each of the recipients), and allows the bulk of data to be encrypted with session keys instead of the hardly-ever-changing public keys.

Message authentication

A message authentication code (MAC) is a cryptographic hash of a message that is encrypted with a shared symmetric key. This MAC is sent along with the message. If an intruder modifies the message, the receiver will be able to validate that the message no longer matches the MAC: simply hash the message and compare it with the value of the decrypted MAC. An intruder cannot generate a new MAC because you need the secret key to do so.
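
As an illustration, Python's standard `hmac` module computes a keyed MAC. Note that HMAC is a different construction from the encrypt-the-hash description above: it mixes the key into the hash computation instead of encrypting the digest, but it serves the same purpose. The key, the messages, and the helper names are hypothetical.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message with the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


if __name__ == "__main__":
    key = b"shared secret key"
    message = b"transfer 10 to Bob"
    tag = make_tag(key, message)
    print(verify(key, message, tag))                   # unmodified message
    print(verify(key, b"transfer 99 to Bob", tag))     # modified message
    print(verify(b"wrong key", message, tag))          # party without the key
```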

A digital signature is similar to a MAC but uses public key cryptography. It is simply the hash of a message encrypted with the creator’s private key. Anyone who has the message signer’s public key can decrypt the hash and thus validate it against the message. Other parties, however, cannot recreate the signature. Even though they can generate the same hash for the message, they do not have the signer’s private key to encrypt that hash.

Public key authentication

As we saw with hybrid cryptosystems, public key cryptography makes key exchange simple. It also simplifies authentication. If Alice wants to authenticate herself to Bob (prove that she’s really Alice), Bob will generate a random bunch of bits, called a nonce, and ask Alice to encrypt the nonce with her private key (something only she can do). She will send the result back to Bob who will decrypt the data with Alice’s public key. If the result matches Bob’s original nonce, he is convinced that Alice has Alice’s private key and therefore is really Alice.
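
The nonce exchange can be sketched with textbook RSA and tiny numbers. Everything here is an assumption for illustration: the primes 61 and 53, the exponents 17 and 2753, the function names, and the absence of padding. Real systems use large keys and a proper signature scheme.

```python
import secrets

N = 61 * 53   # toy modulus (3233)
E = 17        # Alice's public exponent
D = 2753      # Alice's private exponent (E * D = 1 mod 3120)


def make_nonce():
    """Bob picks a random challenge smaller than the modulus."""
    return secrets.randbelow(N - 2) + 2


def sign_nonce(nonce, private_exp=D):
    """Alice transforms the nonce with her private key."""
    return pow(nonce, private_exp, N)


def check_response(nonce, response):
    """Bob applies Alice's public key and compares with his original nonce."""
    return pow(response, E, N) == nonce


if __name__ == "__main__":
    nonce = make_nonce()
    print(check_response(nonce, sign_nonce(nonce)))              # real Alice
    print(check_response(65, sign_nonce(65, private_exp=2754)))  # wrong private key
```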

Digital certificates

For Bob to validate Alice in the above example, Bob must be confident that he really has Alice’s public key rather than someone else’s. X.509 digital certificates provide a way to associate an identity with a public key and have some entity vouch for that association. A certificate is a data structure that contains user information and the user’s public key. This data structure also contains a signature of the certification authority (CA). The signature is created by taking a hash of the rest of the data in the structure and encrypting it with the private key of the CA. The CA is responsible for setting policies of how they validate the identity of the person who presents the public key for encapsulation in a certificate.

Transport Layer Security (Secure Sockets Layer)

Secure Sockets Layer (SSL), now standardized as Transport Layer Security (TLS), is a layer of software above TCP at the application layer designed to provide authentication and secure communication while giving the programmer the feel of a normal sockets interface. It makes it easy to add a secure transport onto insecure TCP socket-based protocols (e.g., HTTP and FTP). SSL uses a hybrid cryptosystem and relies on public keys for authentication. If both the sender and receiver have X.509 digital certificates, SSL can validate them and use nonce-based public key authentication to validate that each party has the corresponding private key. In some cases, it may validate the server only. If the server does not have a certificate, SSL will then use a public key simply to allow a symmetric session key to be passed securely from client to server. The client generates a session key and encrypts it with the server’s public key. This ensures that only the server will be able to decode the message and get the session key. After that, communication takes place using a symmetric algorithm and the client-generated session key. Each encrypted message that is sent contains a MAC (message authentication code) to allow the receiver to ensure that the message has not been accidentally or maliciously modified.

VPNs

Virtual private networks (VPNs) allow disconnected local area networks to communicate securely over the public Internet, saving money by using a shared public network (the Internet) instead of leased lines. This is achieved by tunneling, the encapsulation of an IP datagram within another datagram. In this case, a datagram that is destined for a remote subnet, which will often have local source and destination IP addresses that may not be routable over the public Internet, will be treated as payload and be placed inside a datagram that is routed over the public Internet. The source and destination addresses of this outer datagram are the VPN endpoints on both sides, usually the VPN-aware routers.

When the VPN endpoint (router) receives this encapsulated datagram, it extracts the data, which is a full IP datagram, and routes it on the local area network. This tunneling behavior gives us the virtual network part of the VPN.
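
The encapsulation and extraction steps can be modeled with a small data structure. This is a simplified sketch: the `Datagram` class, the `encapsulate` and `decapsulate` helpers, and all addresses are hypothetical, and real IP headers carry many more fields.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Datagram:
    src: str
    dst: str
    payload: Union[bytes, "Datagram"]  # application data or a nested datagram

def encapsulate(inner: Datagram, local_endpoint: str, remote_endpoint: str) -> Datagram:
    """Sending VPN endpoint: wrap the whole inner datagram as payload."""
    return Datagram(src=local_endpoint, dst=remote_endpoint, payload=inner)

def decapsulate(outer: Datagram) -> Datagram:
    """Receiving VPN endpoint: extract the inner datagram for local routing."""
    if not isinstance(outer.payload, Datagram):
        raise ValueError("payload is not an encapsulated datagram")
    return outer.payload

# Inner datagram uses private addresses that are not routable on the Internet.
inner = Datagram(src="10.1.0.5", dst="10.2.0.9", payload=b"hello")
# Outer datagram is addressed between the two VPN endpoints (example addresses).
outer = encapsulate(inner, "203.0.113.1", "198.51.100.1")
delivered = decapsulate(outer)
```

Routers on the public Internet only ever look at the outer addresses; the private addresses reappear once the remote endpoint unwraps the payload.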

To achieve security (the “private” part of VPN), an administrator setting up a VPN will usually be concerned that the data contents are not readable and the data has not been modified. To ensure this, the encapsulated packets can be encrypted and signed. Signing a packet enables the receiver to validate that the data has not been modified in transit. Encrypting ensures that intruders would not be able to make sense of the data, which is the encapsulated datagram.

VPNs usually provide several options for key management: shared private keys (AES or 3DES), Diffie-Hellman key exchange, or RSA public keys. IPsec is one of the most popular types of VPNs and has two variations. Authentication Header (AH) mode adds a signature to the encapsulated datagram but does not encrypt any data. Data is readable but cannot be modified without the receiver detecting the change. Authentication Header mode is rarely used since the overhead of encrypting data is quite low. The other variant is the Encapsulating Security Payload (ESP) mode, which adds a signature as with AH but also encrypts the entire datagram.
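
The difference between the two variants can be shown with a toy model. This is not the IPsec packet format: it assumes an HMAC-SHA256 tag as the "signature", uses a deliberately simplistic XOR keystream in place of a real cipher such as AES, and all function names are hypothetical.

```python
import hashlib
import hmac

def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with SHA-256(key || counter) blocks. NOT secure."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + (offset // 32).to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)

def ah_protect(auth_key: bytes, datagram: bytes) -> bytes:
    """AH-style: the datagram stays readable; only an integrity tag is added."""
    return datagram + hmac.new(auth_key, datagram, hashlib.sha256).digest()

def esp_protect(enc_key: bytes, auth_key: bytes, datagram: bytes) -> bytes:
    """ESP-style: encrypt the datagram, then add a tag over the ciphertext."""
    ciphertext = keystream_xor(enc_key, datagram)
    return ciphertext + hmac.new(auth_key, ciphertext, hashlib.sha256).digest()

def esp_open(enc_key: bytes, auth_key: bytes, packet: bytes) -> bytes:
    """Receiver: verify the tag first, then decrypt."""
    ciphertext, tag = packet[:-32], packet[-32:]
    expected = hmac.new(auth_key, ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("integrity check failed")
    return keystream_xor(enc_key, ciphertext)

datagram = b"inner IP datagram: 10.1.0.5 -> 10.2.0.9"
ah_packet = ah_protect(b"auth-key", datagram)
esp_packet = esp_protect(b"enc-key", b"auth-key", datagram)
```

An eavesdropper can read the datagram directly out of `ah_packet`, while `esp_packet` reveals it only to a receiver who holds both keys and calls `esp_open`.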

In an environment where an individual computer outside a trusted network needs to connect to another node securely, tunneling will not work since there is no gateway router that will extract an embedded datagram and no trusted local area network on which to route it. To support this environment, VPNs can operate in transport mode instead of tunnel mode. No tunneling takes place and hence there is no encapsulation of the full IP datagram. The IP payload (which will include TCP or UDP headers) is encrypted and signed but the original IP header is left unchanged.