Content Delivery

Content Delivery Networks - Akamai

Paul Krzyzanowski

November 14, 2021

Goal: Provide a highly-scalable infrastructure for caching and serving content to a large number of users with low latency.

Introduction

A challenge in delivering Internet services has been to be able to scale to handle large volumes of user requests and to keep the service highly available. Various approaches have been used to accomplish this.

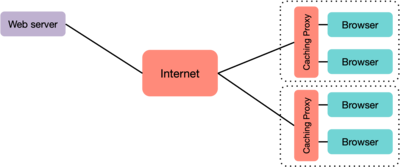

- Proxy servers

- Organizations can pass web requests to caching proxies set up inside their network. Clients contact the proxy for a copy of the content. If the proxy does not have it, the proxy requests it from the server that hosts it. After that, users who need the content can get it directly, and quickly, from the proxy server. This can help a small set of users, but you’re out of luck if you are not in that organization.

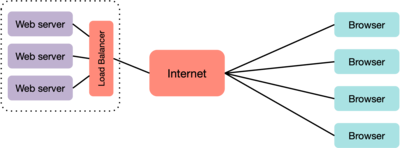

- Clustering within a datacenter with a load balancer

- Multiple servers within a datacenter can be load-balanced. Incoming requests will be distributed among a set of replicated servers. However, they all fail if the datacenter loses power or internet connectivity.

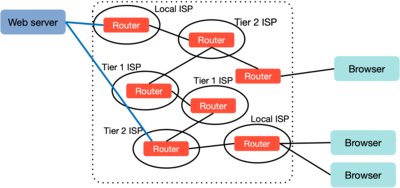

- Multihoming

- Machines can be connected with links to multiple networks served by multiple ISPs to guard against ISP failure. However, protocols that drive dynamic routing of IP packets (BGP) are often not quick enough to find new routes, resulting in service disruption.

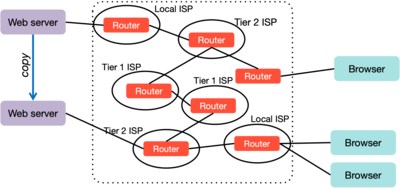

- Mirroring at multiple sites

- The data can be served at multiple sites, with each machine’s content synchronized with the others. However, synchronization can be difficult.

All these solutions require additional capital costs. You are paying for extra capacity and improved availability even if the traffic never comes and the faults never happen. On top of that, this infrastructure will need to be continuously managed and updated.

Content Delivery Networks

A content delivery network (CDN) is a service deployed across a large set of geographically distributed servers that caches content so that users can access cached copies of the content instead of getting it from the original server (called the origin server). These servers are placed at various points at the edges of the Internet at various ISPs so they can be located close to the users who access that content.

By serving content that is replicated on a collection of servers, traffic from the main (master) server is reduced. Because some of the caching servers are likely to be closer to the requesting users, network latency is reduced. Because there are multiple servers, traffic is distributed among them. Since all of the servers are unlikely to be down at the same time, availability is increased. Hence, a CDN can provide highly increased performance, scalability, and availability for content.

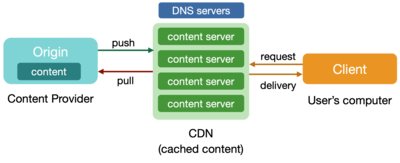

Pushing and pulling

A content delivery network is a collection of servers that cache content. In most cases, the same content will be cached on many servers. The original version of the content is located at the origin: the servers operated by the organization that uses the CDN.

Content may get onto CDNs in two ways and some CDNs will offer both mechanisms.

Pushing content means that the origin (the server with the original content) must explicitly store content onto the CDN’s delivery nodes (its servers). This approach works well when one can anticipate a surge of content requests. For example, when a new operating system update is released, the vendor may push that content to the CDN ahead of time. The CDN distributes the content throughout its servers so that client requests can be satisfied efficiently without touching the origin server. Netflix predicts which shows will be popular when and where and distributes copies of shows across its own CDN, Open Connect, to avoid congestion during peak viewing hours.

Pulling content means that the CDN’s delivery nodes will contact the origin to request content if it is not cached at the CDN. This is the more common way that a CDN operates and the way we normally think about caching: a user contacts a caching service and if the content is not present, the service will get it and cache it in case it is needed again.
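The difference between the two models can be sketched in a few lines. This is only an illustration under simple assumptions: the `EdgeCache` class, its `push` and `get` methods, and the dictionary standing in for the origin are all hypothetical names, not part of any CDN's API.

```python
class EdgeCache:
    """Toy delivery node: serves cached copies and pulls from the origin on a miss."""

    def __init__(self, origin):
        self.origin = origin        # dict standing in for the origin server
        self.store = {}             # content cached at this node
        self.origin_fetches = 0     # number of requests that reached the origin

    def push(self, path, body):
        """Push model: the origin places content here ahead of demand."""
        self.store[path] = body

    def get(self, path):
        """Pull model: serve from cache, contacting the origin only on a miss."""
        if path not in self.store:
            self.origin_fetches += 1
            self.store[path] = self.origin[path]
        return self.store[path]


origin = {"/index.html": "<h1>hello</h1>", "/update.bin": "os-update-v2"}
edge = EdgeCache(origin)

edge.push("/update.bin", origin["/update.bin"])  # pushed before the release
edge.get("/update.bin")                          # hit: no origin traffic
edge.get("/index.html")                          # miss: pulled from the origin
edge.get("/index.html")                          # hit: served from the cache
print(edge.origin_fetches)                       # prints 1
```

Only the first request for `/index.html` reaches the origin; the pushed update never does.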

Akamai CDN

We will look at some parts of a CDN that’s operated by Akamai. Akamai was the first content delivery network provider and is still the largest. The company was born from research at MIT that focused on “inventing a better way to deliver Internet content.” A key issue was the flash crowd problem: what if your web site becomes really popular all of a sudden? Chances are, your servers and/or ISP will be saturated and a vast number of people will not be able to access your content. This became known as the Slashdot effect.

In 2021, Akamai ran on approximately 325,000 servers in around 1,400 networks distributed across approximately 135 countries. Akamai’s traffic reaches volumes of over 30 terabits per second. At the start of the COVID-19 pandemic in 2020, Akamai saw a year’s worth of capacity growth in just a few weeks.

Akamai’s features expanded and evolved over time, as have those of other CDNs. This discussion will not cover Akamai’s full suite of offerings – or those of any other CDN. Instead, we will look at some core mechanisms as an example of how a CDN can be architected. These mechanisms are shared by CDNs from other companies as well.

CDN components

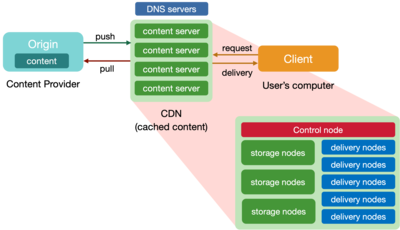

A basic CDN will contain three types of components:

- Control nodes. These track the performance and availability of the various servers and networks. They provide data that will be used by the CDN’s DNS servers so it can balance server load by choosing which IP addresses to return for queries and so it can attempt to identify the best server for each client request. Control nodes can also collect overall statistics for billing and analytics.

- Storage nodes are the heart of the CDN. They are the servers that store copies of the content.

- Delivery nodes accept client connections and manage sessions. They are tightly coupled with storage nodes (and may live on the same hardware), from which they get the data they serve.

Dynamic DNS

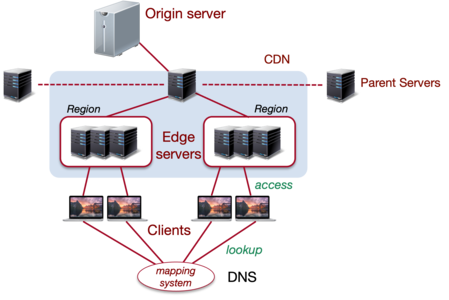

The goal of a CDN is to serve clients from the nearest available servers that are likely to have the requested content. According to the company’s statistics, 85 percent of the world’s Internet users are within a single network hop of an Akamai CDN server.

To access a web site, the user’s computer first looks up a domain name via DNS. A mapping system collects information about the state of the Akamai network and locates the caching server that can serve the content. Akamai deploys custom dynamic DNS servers, and customers who use Akamai’s services configure their DNS server with an alias (CNAME entry) that points to an Akamai domain name that has the customer’s domain encoded within it. For example, a DNS query for www.example.com may return a CNAME (alias) of www.example.com.edgesuite.net. The edgesuite.net domain is an Akamai domain, and the client will contact an Akamai-managed DNS server to look that up.
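The alias lookup can be pictured with a small simulation. This is a sketch under stated assumptions: the record table is a plain dictionary rather than real DNS, the `resolve` function is a hypothetical helper, and 203.0.113.10 is a documentation-only address, not an actual Akamai server.

```python
# Hypothetical DNS records. The first lives on the customer's DNS server,
# the second on a CDN-managed DNS server.
RECORDS = {
    "www.example.com": ("CNAME", "www.example.com.edgesuite.net"),
    "www.example.com.edgesuite.net": ("A", "203.0.113.10"),
}


def resolve(name, records, max_hops=8):
    """Follow CNAME aliases until an A record is found; return (chain, address)."""
    chain = [name]
    for _ in range(max_hops):
        record_type, value = records[name]
        if record_type == "A":
            return chain, value
        name = value          # the alias tells us which name to look up next
        chain.append(name)
    raise RuntimeError("CNAME chain too long")


chain, address = resolve("www.example.com", RECORDS)
print(" -> ".join(chain), "->", address)
```

Because the final lookup is answered by the CDN's own DNS servers, the CDN decides which address the client receives.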

Akamai’s dynamic DNS servers use the requestor’s IP address to find the nearest edge server that is likely to hold the cached content for the requested site.

When an Akamai DNS server gets a request to resolve a host name, it chooses the IP address to return based on:

- domain name being requested

- server health

- server load

- user location

- network status

- load balancing

Akamai monitors the performance of their edge servers and can perform load shedding on specific content servers; if servers get too loaded, the DNS server will not respond with those addresses.
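A minimal sketch of how these factors might combine is shown below. The `EdgeServer` fields, the `LOAD_LIMIT` threshold, the region labels, and the ranking rule are assumptions chosen for illustration; Akamai's actual mapping logic is not described in this text.

```python
from dataclasses import dataclass


@dataclass
class EdgeServer:
    ip: str
    region: str
    healthy: bool
    load: float  # fraction of capacity in use, 0.0 to 1.0


LOAD_LIMIT = 0.9  # hypothetical threshold above which a server is shed


def pick_servers(servers, client_region, count=2):
    """Return up to `count` addresses: healthy and not overloaded,
    with servers in the client's region first, then the least loaded."""
    candidates = [s for s in servers if s.healthy and s.load < LOAD_LIMIT]
    candidates.sort(key=lambda s: (s.region != client_region, s.load))
    return [s.ip for s in candidates[:count]]


servers = [
    EdgeServer("198.51.100.1", "us-east", True, 0.95),   # overloaded: shed
    EdgeServer("198.51.100.2", "us-east", True, 0.40),
    EdgeServer("198.51.100.3", "us-east", False, 0.10),  # unhealthy: skipped
    EdgeServer("198.51.100.4", "eu-west", True, 0.20),
]
answer = pick_servers(servers, "us-east")
print(answer)  # ['198.51.100.2', '198.51.100.4']
```

The overloaded and unhealthy servers never appear in the response, which is the load-shedding behavior described above.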

Content acquisition

Now that the client has an IP address of an edge content server, it sends a request for content. That edge server may already have the content and be able to serve it directly. Otherwise, the edge server needs to get the content. Edge servers are organized into regions; a set of servers in the same data center at an ISP know about each other and share the region. A server that needs content will first send a broadcast request to its peers within the same region. If they have the content, they can send it quickly. This is a low-overhead request with a short timeout.

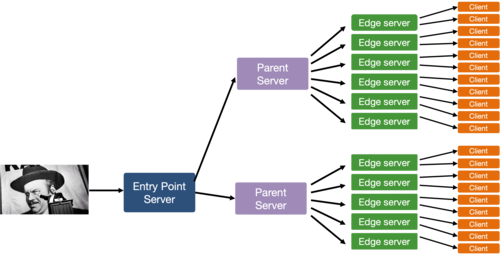

Akamai organizes its servers into a tiered hierarchy. If the edge server cannot find the content in its region, it will send a request to its parent server. If the parent does not have the content, it will broadcast a request to a group of other parent servers. If the content is not found within the CDN, the parent will need to contact the origin server (the server at the company that hosts the content) via its transport system.
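The lookup order described above can be sketched as a search from the nearest cache to the farthest. In this illustration every cache is a plain dictionary, and the `fetch` function and its parameters are hypothetical; real servers would use network requests with timeouts rather than dictionary lookups.

```python
def fetch(path, edge, region_peers, parent, parent_peers, origin):
    """Return (content, source), searching caches from nearest to farthest."""
    tiers = [
        ("edge", [edge]),
        ("region peer", region_peers),
        ("parent", [parent]),
        ("parent peer", parent_peers),
    ]
    for label, caches in tiers:
        for cache in caches:
            if path in cache:
                edge[path] = cache[path]  # keep a copy at the edge for next time
                return cache[path], label
    # Not in the CDN at all: the parent contacts the origin and caches the result.
    body = origin[path]
    parent[path] = body
    edge[path] = body
    return body, "origin"


edge, parent = {}, {}
region_peers = [{}, {"/logo.png": "PNG"}]
parent_peers = [{"/video.mp4": "MP4"}]
origin = {"/logo.png": "PNG", "/video.mp4": "MP4", "/new.css": "CSS"}

for path in ["/logo.png", "/video.mp4", "/new.css", "/new.css"]:
    body, source = fetch(path, edge, region_peers, parent, parent_peers, origin)
    print(path, "served from", source)
```

The second request for `/new.css` is served from the edge, since the first request left a copy there.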

Transport system

To get the content from the origin efficiently, Akamai manages an overlay network: the collection of its thousands of servers and statistics about their availability and connectivity. Akamai generates its own map of overall IP network topology based on BGP (Border Gateway Protocol) and traceroute data from various points on the network as well as sending probe messages between various Akamai content servers and origin servers.
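One way to picture how such a map could be used is a shortest-path search over measured latencies between overlay servers. This is a sketch, not Akamai's algorithm: the node names, the latency figures, and the choice of Dijkstra's algorithm are all assumptions made for illustration.

```python
import heapq


def best_route(links, source, target):
    """Dijkstra's algorithm over measured latencies; returns (total_ms, path)."""
    queue = [(0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, ms in links.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + ms, neighbor, path + [neighbor]))
    return None  # no route found


# Hypothetical one-way latencies in milliseconds between overlay nodes.
links = {
    "edge": {"origin": 180, "relay-a": 30, "relay-b": 50},
    "relay-a": {"relay-b": 25, "origin": 120},
    "relay-b": {"origin": 40},
}
print(best_route(links, "edge", "origin"))  # (90, ['edge', 'relay-b', 'origin'])
```

In this made-up topology, forwarding through one relay takes 90 ms while the direct path takes 180 ms, which is the kind of gain an overlay is meant to find.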

Content servers report their load along with bandwidth and latency measurements to a monitoring application. The monitoring application publishes load reports to a local Akamai DNS server, which then determines which IP addresses to return when resolving names.

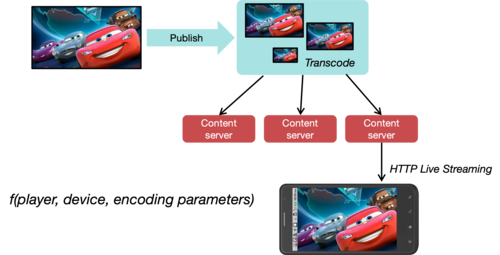

Video

Video is the most bandwidth-intensive service on the Internet and has been a huge source of growth for CDNs. As an example, Netflix operates a global CDN called Open Connect, which contains up to 80 percent of its entire media catalog. Stored (e.g., on-demand) video is, in some ways, just like any other content that can be distributed and cached. The difference is that, for use cases that require video streaming rather than downloading (i.e., video on demand services), the video stream may be reprocessed, or transcoded, to lower bitrates to support smaller devices or lower-bandwidth connections.

Today, HTTP Live Streaming, or HLS, is the most popular protocol for streaming video. It allows the use of standard HTTP to deliver content and uses a technique called adaptive bitrate (ABR) streaming to deliver video. ABR supports playback on various devices in different formats and bitrates. The CDN takes on the responsibility of taking the video stream and converting it into a sequence of chunks. Each chunk represents between two and ten seconds of video. The CDN then encodes each chunk at various bitrates, which can affect the quality and resolution of that chunk of video. For content delivery, the HLS protocol uses feedback from the user’s client to select the optimal chunk. It revises this choice constantly throughout playback since network conditions can change.
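The per-chunk choice can be sketched as picking the highest encoded bitrate that fits the throughput most recently measured by the client. The bitrate ladder, the `safety` margin, and the `choose_bitrate` function are hypothetical; real players use more elaborate heuristics, such as also tracking buffer occupancy.

```python
BITRATES_KBPS = [400, 1200, 2500, 5000]  # hypothetical encodings of each chunk


def choose_bitrate(throughput_kbps, ladder=BITRATES_KBPS, safety=0.8):
    """Pick the highest bitrate that fits within a safety fraction of the
    measured throughput; fall back to the lowest bitrate if none fits."""
    budget = throughput_kbps * safety
    fitting = [b for b in sorted(ladder) if b <= budget]
    return fitting[-1] if fitting else min(ladder)


# Throughput measured before each successive chunk request (kbps).
measured = [6500, 3000, 900, 300, 4000]
plan = [choose_bitrate(t) for t in measured]
print(plan)  # [5000, 1200, 400, 400, 2500]
```

The selected quality drops as the connection degrades and recovers when bandwidth returns, without interrupting playback.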

Live video cannot be cached but CDNs offer huge benefits in distributing video. They operate many servers on the edge of the network that span multiple ISPs and geographies. A video stream is sent from the origin to an entry-point server within the CDN network. The entry-point server then forwards this stream to multiple edge servers. For efficiency, this can be done via a hierarchy of servers. The edge servers then make the content available to users. In many ways, this behavior is similar to IP multicast without the need for the various underlying networks to support IP multicast. CDNs can also help with progressive downloads, which is the case where the user can start watching content while it is still being downloaded.

CDN benefits

A CDN, serving as a caching overlay, provides several distinct benefits:

Caching: static content can be served from caches, thus reducing the load on origin servers. A CDN will try to distribute content to its servers that are closest to the users that request the content. These will usually be servers that are on the same ISP and geographic region as the user requesting the content. Note that CDNs cache content, and not logic. If the content is generated dynamically from database lookups, it does not make sense to cache it.

Web pages with dynamically-generated content, however, often contain many components that can be cached. This includes CSS files, fonts, and embedded images and videos.

Routing: by measuring latency, packet loss, and bandwidth throughout its collection of servers, the CDN can find the best route to an origin server, even if that requires forwarding the request through several of its servers instead of relying on IP routers to make the decision. These servers keep open TCP connections to avoid the overhead of setting up new connections for each request.

Security: Because all requests go to the CDN, which can handle a high capacity of requests, it absorbs any Distributed Denial-of-Service attacks (DDoS) rather than overwhelming the origin server. Moreover, any penetration attacks target the machines in the CDN rather than the origin servers. A company (or a group within a company) that runs the CDN service is singularly focused on the IT infrastructure of the service and is likely to have the expertise to ensure that systems are properly maintained, updated, and are running the best security software. These aspects may be neglected by companies that need to focus on other services.

Analytics: CDNs monitor virtually every aspect of their operation, providing their customers with detailed reports on the quality of service, network latency, and information on where the clients are located.

Cost: CDNs cost money, which is a disadvantage. However, CDNs are an elastic service, which is one that can adapt to changing workloads by using more or fewer computing and storage resources. CDNs provide the ability to scale instantly to surges of demand practically anywhere in the world. They absorb all the traffic so the service providers don’t have to scale up: they pay for what they use.

References

Akamai, Content Delivery Networks — What is a CDN?, Akamai.com, 2021.

Akamai, Onboard & Configuration Assistant, Akamai.com

Akamai, Edge Side Includes, Akamai

Francesco Altomare, Content Delivery Network Explained, GlobalDots Blog, April 4, 2021.

CDN Architecture, KeyCDN, October 4, 2018.

Edge Side Includes, Wikipedia

HTTP Live Streaming, Wikipedia

Catie Keck, A Look Under the Hood of the Most Successful Streaming Service on the Planet, The Verge, November 17, 2021.

Erik Nygren, Ramesh Sitaraman, Jennifer Sun, The Akamai Network: A Platform for High-Performance Internet Applications, ACM SIGOPS Operating Systems Review, Vol. 44, No. 3, pp. 2–19, July 2010. https://doi.org/10.1145/1842733.1842736

George Pallis and Athena Vakali, [Insight and Perspectives for Content Delivery Networks](https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.74.4021&rep=rep1&type=pdf), Communications of the ACM, Vol. 49, No. 1, pp. 101–106, January 2006.

Website speed benefits, Dartspeed.com