Final Exam Study Guide

The three-hour study guide for the final exam

Paul Krzyzanowski

May 2025

Last update: Tue May 13 14:17:22 EDT 2025

Introduction

Computer security is about keeping computers, their programs, and the data they manage “safe.” Specifically, this means safeguarding three areas: confidentiality, integrity, and availability. These three are known as the CIA Triad (no relation to the Central Intelligence Agency).

- Confidentiality

- Confidentiality ensures that information is protected from unauthorized access, allowing only authorized users to view or modify it. Privacy gives individuals control over their personal data, focusing on how it is collected and shared. Privacy is a reason for confidentiality. Someone being able to access a protected file containing your medical records without proper access rights is a violation of confidentiality. Anonymity hides a person’s identity, even if their actions are visible. Secrecy involves the deliberate concealment of information for security or strategic reasons.

- A data breach occurs when unauthorized individuals access sensitive data due to hacking, malware, or poor security controls. This can expose personal, financial, or corporate information, leading to identity theft or financial loss. Data exfiltration is the unauthorized transfer of stolen data from a system, often as part of a breach. Attackers use malware, phishing, or compromised credentials to extract information for fraud or sale.

- Integrity

Integrity refers to the trustworthiness of a system. This means that everything is as you expect it to be: users are not imposters and processes are running correctly.

Data integrity means that the data in a system has not been corrupted.

Origin integrity means that the person or system sending a message or creating a file truly is that person and not an imposter. Authentication techniques can address this issue.

Recipient integrity means that the person or system receiving a message truly is that person and not an imposter.

System integrity means that the entire computing system is working properly; that it has not been damaged or subverted. Processes are running the way they are supposed to.

- Maintaining integrity means not just defending against intruders that want to modify a program or masquerade as others but also protecting the system against accidental damage, such as from user or programmer errors.

- Availability

- Availability means that the system is available for use and performs properly. A denial of service (DoS) attack may not steal data or damage any files but may cause a system to become unresponsive.

Security is difficult. Software is incredibly complex. Large systems may comprise tens or hundreds of millions of lines of code. Systems as a whole are also complex. We may have a mix of cloud and local resources, third-party libraries, and multiple administrators. If security were easy, we would not have massive security breaches year after year. Microsoft wouldn’t have monthly security updates. There are no magic solutions … but there is a lot that can be done to mitigate the risk of attacks and their resultant damage.

We saw that computer security addressed three areas of concern. The design of security systems also has three goals.

- Prevention

- Prevention means preventing attackers from violating established security policies. It means that we can implement mechanisms into our hardware, operating systems, and application software that users cannot override – either maliciously or accidentally. Examples of prevention include enforcing access control rules for files and authenticating users with passwords.

- Detection

- Detection detects and reports security attacks. It is particularly important when prevention mechanisms fail. It is useful because it can identify weaknesses with certain prevention mechanisms. Even if prevention mechanisms are successful, detection mechanisms are useful to let you know that attempted attacks are taking place. An example of detection is notifying an administrator that a new user has been added to the system. Another example is being notified that there have been several consecutive unsuccessful attempts to log in.

- Recovery

- If a system is compromised, we need to stop the attack and repair any damage to ensure that the system can continue to run correctly and the integrity of data is preserved. Recovery includes forensics, the study of identifying what happened and what was damaged so we can fix it. An example of recovery is restoration from backups.

Security engineering involves implementing mechanisms and defining policies to protect a system’s components. Like other engineering disciplines, it requires trade-offs between security and usability. The most secure system would be completely isolated, housed in a shielded room with restricted access, and running fully audited software—but such a setup is impractical for everyday computing. Users need connectivity, mobility, and interaction with the world, which introduces risks. Even in a highly secure environment, concerns remain: monitoring access, verifying software integrity, and preventing insider threats or coercion. Effective security design requires understanding potential attackers and their threats, balancing protection with functionality.

Risk analysis evaluates the likelihood and impact of an attack, identifying who may be affected and the worst possible consequences. A threat model visually maps data flows, highlighting points where information enters, exits, or moves between subsystems. This helps prioritize security efforts by identifying the most vulnerable areas in a system.

Secure systems consist of policies and mechanisms working together to enforce security. A policy defines what is or isn’t allowed, such as requiring users to log in with a password. Mechanisms are the technical implementations that enforce these policies. For example, a login system that prompts for credentials, verifies them against stored records, and grants access only if authentication succeeds ensures that the policy is followed. Effective security requires both well-defined policies and robust mechanisms to enforce them.

Key Cybersecurity Concepts

Understanding how attacks occur requires familiarity with key terms that describe weaknesses, threats, and attack methods. The following definitions explain fundamental concepts related to system security and cyber threats.

Vulnerability

A vulnerability is a weakness in a system, software, or network that can be exploited by an attacker. Vulnerabilities can arise from software bugs, misconfigurations, or weak security practices.

Example: An outdated web server with an unpatched security flaw that allows unauthorized access.

Exploit

An exploit is a technique, tool, or piece of code designed to take advantage of a vulnerability. Exploits can be automated scripts, malware, or sophisticated attack methods used to gain unauthorized access or control over a system.

Example: A hacker uses a known buffer overflow exploit to crash a system and execute malicious code.

Attack

An attack is a deliberate attempt to compromise a system’s security, often with the goal of stealing data, disrupting services, or gaining unauthorized control. Attacks can be carried out manually by skilled hackers or through automated tools.

Example: A phishing attack that tricks users into revealing their login credentials.

Attack Vector

An attack vector is the method or pathway an attacker uses to deliver an exploit and gain access to a system. Attack vectors can be technical (such as software vulnerabilities) or social (such as phishing).

Example: A malicious email attachment that installs malware when opened.

Attack Surface

An attack surface represents the total number of possible entry points that an attacker can target. A larger attack surface increases the risk of security breaches, as more vulnerabilities may be exposed.

Example: A company’s attack surface includes its public website, employee email accounts, remote access systems, and IoT devices.

Threat

A threat is any potential danger that could exploit a vulnerability to cause harm. Threats can originate from malicious actors, software bugs, or natural disasters that disrupt security.

Example: A ransomware threat that encrypts critical files and demands payment for their release.

Threat Actor

A threat actor is the entity responsible for carrying out an attack. Threat actors include hackers, cybercriminal groups, nation-state attackers, and even insiders with malicious intent.

Example: The Lazarus Group, a North Korean cyber-espionage team responsible for various high-profile attacks.

Threat categories

Threats fall into four broad categories:

Disclosure: Unauthorized access to data, which covers exposure, interception, interference, and intrusion. This includes stealing data, improperly making data available to others, or snooping on the flow of data.

Deception: Accepting false data as true. This includes masquerading, which is posing as an authorized entity; substitution or insertion, which includes the injection of false data or modification of existing data; and repudiation, where someone falsely denies receiving or originating data.

Disruption: Some change that interrupts or prevents the correct operation of the system. This can include maliciously changing the logic of a program, a human error that disables a system, an electrical outage, or a failure in the system due to a bug. It can also refer to any obstruction that hinders the functioning of the system.

Usurpation: Unauthorized control of some part of a system. This includes theft of service as well as any misuse of the system such as tampering or actions that result in the violation of system privileges.

Network threats

The Internet increases opportunities for attackers. The core protocols of the Internet were designed with decentralization, openness, and interoperability in mind rather than security. Anyone can join the Internet and send messages … and untrustworthy entities can provide routing services. It allows bad actors to hide and to attack from a distance. It also allows attackers to amass asymmetric force: harnessing more resources to attack than the victim has for defense. Even small groups of attackers are capable of mounting Distributed Denial of Service (DDoS) attacks that can overwhelm large companies or government agencies by assembling a botnet of tens or hundreds of thousands of compromised computers.

Threat actors

Adversaries can range from lone hackers to industrial spies, terrorists, and intelligence agencies. We can consider two dimensions: skill and focus.

Regarding focus, attacks are either opportunistic or targeted.

Opportunistic attacks are those where the attacker is not out to get you specifically but casts a wide net, trying many systems in the hope of finding a few that have a particular vulnerability that can be exploited. Targeted attacks are those where the attacker targets you specifically.

In the dimension of skill, the term script kiddies is used to refer to attackers who lack the skills to craft their own exploits but download malware toolkits to try to find vulnerabilities (e.g., systems with poor or default passwords, hackable cameras). They can still cause substantial damage.

Advanced persistent threats (APT) are highly-skilled, well-funded, and determined (hence, persistent) attackers. They can craft their own exploits, pay millions of dollars for others, and may carry out complex, multi-stage attacks.

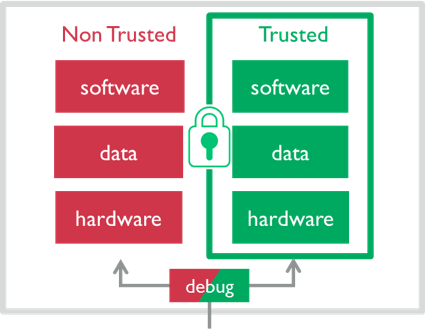

Trusted computing base

The Trusted Computing Base (TCB) consists of the hardware, software, and firmware that enforce a system’s security policies. If the TCB is compromised, you lose assurance that any part of the system remains secure. For example, if an attacker modifies the operating system to ignore file access permissions, applications running on the system can no longer be trusted to enforce security rules. A compromised TCB can allow unauthorized access, privilege escalation, or persistent backdoors, making security controls ineffective.

The computing supply chain is crucial to TCB security, as modern systems rely on globally sourced and third-party components – both hardware and software. A compromised supply chain—whether through malicious firmware, counterfeit hardware, or backdoored software—can introduce vulnerabilities before a system is even deployed. Examples include the SolarWinds breach and hardware-level implants, which demonstrated how attackers can infiltrate systems at a fundamental level. If an attacker compromises the supply chain, even secure software running on top of the system cannot be trusted. To prevent such risks, organizations implement secure sourcing, vendor audits, firmware integrity checks, and hardware attestation to ensure the trustworthiness of the computing infrastructure.

Cryptography

Cryptography deals with encrypting plaintext using a cipher, also known as an encryption algorithm, to create ciphertext, which is unintelligible to anyone unless they can decrypt the ciphertext. It is a tool that helps build protocols that address:

- Authentication:

- Showing that the user really is that user.

- Integrity:

- Validating that the message has not been modified.

- Nonrepudiation:

- Binding the origin of a message to a user so that she cannot deny creating it.

- Confidentiality:

- Hiding the contents of a message.

A secret cipher is one where the workings of the cipher must be kept secret. There is no reliance on any key and the secrecy of the cipher is crucial to the value of the algorithm. This has obvious flaws: people in the know leaking the secret, designers coming up with a poor algorithm, and reverse engineering. Schneier’s Law (not a real law), named after Bruce Schneier, a cryptographer and security professional, suggests that anyone can invent a cipher that they will not be able to break, but that doesn’t mean it’s a good one.

For any serious use of encryption, we use well-tested, non-secret algorithms that rely on secret keys. A key is a parameter to a cipher that alters the resulting ciphertext. Knowledge of the key is needed to decrypt the ciphertext. Kerckhoffs’s Principle states that a cryptosystem should be secure even if everything about the system, except the key, is public knowledge. We expect algorithms to be publicly known and all security to rest entirely on the secrecy of the key.

A symmetric encryption algorithm uses the same secret key for encryption and decryption.

An alternative to a symmetric cipher is an asymmetric cipher. An asymmetric, or public key, cipher uses two related keys. Data encrypted with one key can only be decrypted with the other key.

Properties of good ciphers

These are the key properties we expect for a cipher to be strong:

- For a cipher to be considered good, ciphertext should be indistinguishable from random values.

- Given ciphertext, there should be no way to extract the original plaintext or the key that was used to create it except by enumerating over all possible keys. This is called a brute-force attack.

- The keys used for encryption should be large enough that a brute force attack is not feasible. Each additional bit in a key doubles the number of possible keys and hence doubles the search time.

Stating that the ciphertext should be indistinguishable from random values implies high entropy. Shannon entropy measures the randomness in a system. It quantifies the unpredictability of cryptographic keys and messages, with higher entropy indicating more randomness. Low entropy would allow an attacker to find patterns or some correlation to the original content.
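As a rough illustration of the entropy measure described above, the following sketch estimates Shannon entropy in bits per byte from byte frequencies. The function name `shannon_entropy` and the sample inputs are assumptions chosen for this example. Output from `os.urandom` is used only as a stand-in for what good ciphertext should look like.

```python
import math
import os
from collections import Counter

def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of data in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    # H = -sum(p * log2(p)) over the observed byte values
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

# English-like text uses few distinct symbols with uneven frequencies.
english = b"the quick brown fox jumps over the lazy dog " * 50
# Stand-in for strong ciphertext: bytes from the operating system's random source.
random_like = os.urandom(len(english))

print(f"English text : {shannon_entropy(english):.2f} bits/byte")
print(f"Random bytes : {shannon_entropy(random_like):.2f} bits/byte")
```

The text sample should score well below the random bytes, which approach the 8 bits per byte maximum. A byte-frequency estimate like this only catches simple patterns; passing it does not show that a cipher is strong.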

We expect these properties for a cipher to be useful:

The secrecy of the cipher should be entirely in the key (Kerckhoffs’s principle) – we expect knowledge of the algorithm to be public.

Encryption and decryption should be efficient: we want to encourage the use of secure cryptography where it is needed and not have people avoid it because it slows down data access.

Keys and algorithms should be as simple as possible and operate on any data:

- There shouldn’t be restrictions on the values of keys, the data that could be encrypted, or how to do the encryption

- Restrictions on keys make searches easier and will require longer keys.

- Complex algorithms will increase the likelihood of implementation errors.

- Restrictions on what can be encrypted will encourage people to not use the algorithm.

The size of the ciphertext should be the same size as the plaintext.

- You don’t want your effective bandwidth cut in half because the ciphertext is 2x the size of plaintext.

- However, sometimes we might need to pad the data but that’s a small number of bytes regardless of the input size.

The algorithm has been extensively analyzed

- We don’t want the latest – we want an algorithm that has been studied carefully for years by many experts.

In addition to formulating the measurement of entropy, Claude Shannon posited that a strong cipher should, ideally, have confusion and diffusion as goals in its operation.

Confusion means that there is no direct correlation between a bit of the key and the resulting ciphertext. Every bit of ciphertext will be impacted by multiple bits of the key. An attacker will not be able to find a connection between a bit of the key and a bit of the ciphertext. This is important in not giving the cryptanalyst hints on what certain bits of the key might be and thus limit the set of possible keys. Confusion hides the relationship between the key and ciphertext.

Diffusion is the property where the plaintext information is spread throughout the cipher so that a change in one bit of plaintext will change, on average, half of the bits in the ciphertext. Diffusion tries to make the relationship between the plaintext and ciphertext as complicated as possible.

Classic cryptography

Monoalphabetic substitution ciphers

The earliest form of cryptography was the monoalphabetic substitution cipher. In this cipher, each character of plaintext is substituted with a character of ciphertext based on a substitution alphabet (a lookup table). The simplest of these is the Caesar cipher, known as a shift cipher, in which a plaintext character is replaced with a character that is n positions away in the alphabet. The key is simply the shift value: the number n. Substitution ciphers are vulnerable to frequency analysis attacks, in which an analyst analyzes letter frequencies in ciphertext and substitutes characters with those that occur with the same frequency in natural language text (e.g., if “x” occurs 12% of the time, it’s likely to really be an “e” since “e” occurs in English text approximately 12% of the time while “x” occurs only 0.1% of the time).
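The shift cipher and the frequency analysis attack can be sketched in a few lines. This is a minimal illustration that assumes lowercase English text in which "e" is the most common letter; the function names and the sample sentence are made up for the example, and a real attack would compare the full frequency distribution rather than a single letter.

```python
import string
from collections import Counter

ALPHABET = string.ascii_lowercase

def caesar(text: str, shift: int) -> str:
    """Shift each lowercase letter by `shift` positions; leave other characters alone."""
    out = []
    for ch in text:
        if ch in ALPHABET:
            out.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            out.append(ch)
    return "".join(out)

def guess_shift(ciphertext: str) -> int:
    """Guess the key by assuming the most frequent ciphertext letter stands for 'e'."""
    letters = [ch for ch in ciphertext if ch in ALPHABET]
    most_common = Counter(letters).most_common(1)[0][0]
    return (ALPHABET.index(most_common) - ALPHABET.index("e")) % 26

plaintext = "meet me here at seven; the secret experiment needs every keeper present"
ciphertext = caesar(plaintext, 3)

shift = guess_shift(ciphertext)          # recovered without knowing the key
recovered = caesar(ciphertext, -shift)   # shifting back undoes the encryption
print(shift, recovered)
```

With only 26 possible keys, the cipher also falls to simply trying every shift; frequency analysis matters more for general substitution alphabets, where the key space is far larger.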

Polyalphabetic substitution ciphers

Polyalphabetic substitution ciphers were designed to increase resiliency against frequency analysis attacks. Instead of using a single plaintext to ciphertext mapping for the entire message, the substitution alphabet may change periodically. Leon Battista Alberti is credited with creating the first polyalphabetic substitution cipher. In the Alberti cipher (essentially a secret decoder ring), the substitution alphabet changes every n characters as the ring is rotated one position every n characters.

The Vigenère cipher is a grid of Caesar ciphers that uses a repeating key. A repeating key is a key that repeats itself for as long as the message. Each character of the key determines which Caesar cipher (which row of the grid) will be used for the next character of plaintext. The position of the plaintext character identifies the column of the grid. These algorithms are still vulnerable to frequency analysis attacks but require substantially more plaintext since one needs to deduce the key length (or the frequency at which the substitution alphabet changes) and then effectively decode multiple monoalphabetic substitution ciphers.
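A short sketch of the Vigenère cipher follows. It assumes lowercase letters only and computes the grid lookup arithmetically: adding the key letter's position to the plaintext letter's position modulo 26 gives the same result as finding the row and column in the grid. The function name `vigenere` is an assumption for this example.

```python
from itertools import cycle

A = ord("a")

def vigenere(text: str, key: str, decrypt: bool = False) -> str:
    """Apply a repeating-key Caesar shift to lowercase text (letters only)."""
    out = []
    # cycle(key) repeats the key for as long as the message
    for p, k in zip(text, cycle(key)):
        shift = ord(k) - A          # which Caesar cipher (row) to use
        if decrypt:
            shift = -shift
        out.append(chr((ord(p) - A + shift) % 26 + A))
    return "".join(out)

ciphertext = vigenere("attackatdawn", "lemon")
print(ciphertext)                                   # lxfopvefrnhr
print(vigenere(ciphertext, "lemon", decrypt=True))  # attackatdawn
```

Note that the two occurrences of "at" in the plaintext encrypt differently here only because they fall on different key positions; repeats that align with the key length would leak, which is what an analyst exploits to deduce that length.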

One-time Pads

The one-time pad is the only provably secure cipher. It uses a random key that is as long as the plaintext. Each character of plaintext is combined with a character of the key (e.g., add the characters modulo the size of the alphabet or, in the case of binary data, exclusive-or the next byte of the text with the next byte of the key). The reason this cryptosystem is not particularly useful is that the key has to be as long as the message, so transporting the key securely becomes a problem. The challenge of sending a message securely is now replaced with the challenge of sending the key securely. The position in the key (pad) must be synchronized at all times. Error recovery from unsynchronized keys is not possible. Finally, for the cipher to be secure, a key must be composed of truly random characters, not ones derived by an algorithmic pseudorandom number generator. The key can never be reused.

The one-time pad provides perfect secrecy (not to be confused with forward secrecy, also called perfect forward secrecy, which will be discussed later), which means that the ciphertext conveys no information about the content of the plaintext. It has been proved that perfect secrecy can be achieved only if there are at least as many possible keys as possible plaintexts, meaning the key has to be as long as the message.
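The following sketch shows the binary form of the one-time pad and hints at why it offers perfect secrecy. It is an illustration under assumptions: `secrets.token_bytes` is a cryptographic random source provided by the operating system, used here as a stand-in for the truly random pad the cipher requires, and the messages are invented for the example.

```python
import secrets

def otp_xor(data: bytes, pad: bytes) -> bytes:
    """XOR data with a pad of the same length; the same call encrypts and decrypts."""
    if len(pad) != len(data):
        raise ValueError("the pad must be exactly as long as the message")
    return bytes(d ^ p for d, p in zip(data, pad))

message = b"ATTACK AT DAWN"
pad = secrets.token_bytes(len(message))   # stand-in for a truly random, never-reused pad

ciphertext = otp_xor(message, pad)
recovered = otp_xor(ciphertext, pad)

# Perfect secrecy, informally: for ANY plaintext of the same length there is
# some pad that maps it to this exact ciphertext, so the ciphertext alone
# cannot tell an attacker which message was sent.
decoy = b"RETREAT AT TEN"
decoy_pad = otp_xor(ciphertext, decoy)
assert otp_xor(ciphertext, decoy_pad) == decoy
```

Reusing a pad breaks this property: XORing two ciphertexts made with the same pad cancels the pad and leaves the XOR of the two plaintexts.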

Stream ciphers

A stream cipher simulates a one-time pad by using a keystream generator to create a set of key bytes that is as long as the message. A keystream generator is a pseudorandom number generator that is seeded, or initialized, with a key that drives the output of all the bytes that the generator spits out. The keystream generator is fully deterministic: the same key will produce the same stream of output bytes each time. Because of this, receivers only need to have the key to be able to decipher a message. However, because the keystream generator does not generate true random numbers, the stream cipher is not a true substitute for a one-time pad. Its strength rests on the strength of the key. A keystream generator will, at some point, reach an internal state that is identical to some previous internal state and produce output that is a repetition of previous output. This also limits the security of a stream cipher but the repetition may not occur for a long time, so stream ciphers can still be useful for many purposes.
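The structure of a stream cipher can be sketched with any seeded pseudorandom generator. The example below uses Python's `random.Random` (a Mersenne Twister) purely to show the determinism described above. It is not cryptographically secure and must not be used for real encryption; the function names are assumptions for the example.

```python
import random

def keystream(key: bytes, length: int) -> bytes:
    """Generate `length` pseudorandom bytes determined entirely by `key`."""
    rng = random.Random(key)   # seeded generator: NOT secure, illustration only
    return bytes(rng.getrandbits(8) for _ in range(length))

def stream_xor(data: bytes, key: bytes) -> bytes:
    """XOR data with the keystream; the same call encrypts and decrypts."""
    return bytes(d ^ k for d, k in zip(data, keystream(key, len(data))))

key = b"shared secret"
ciphertext = stream_xor(b"stream ciphers imitate a one-time pad", key)
plaintext = stream_xor(ciphertext, key)   # the receiver regenerates the same keystream
print(plaintext)
```

Because the keystream depends only on the key, encrypting two messages with the same key reuses the same pad bytes, which is why practical stream ciphers also mix in a per-message value.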

Rotor machines

A rotor machine is an electromechanical device that implements a polyalphabetic substitution cipher. It uses a set of disks (rotors), each of which implements a substitution cipher. The rotors rotate with each character in the style of an odometer: after a complete rotation of one rotor, the next rotor advances one position. Each successive character gets a new substitution alphabet applied to it. The multi-rotor mechanism allows for a huge number of substitution alphabets to be employed before they start repeating when the rotors all reach their starting position. The number of alphabets is $c^r$, where c is the number of characters in the alphabet and r is the number of rotors.

Transposition ciphers

Instead of substituting one character of plaintext for a character of ciphertext, a transposition cipher scrambles the position of the plaintext characters. Decryption is the knowledge of how to unscramble them.

A scytale, also known as a staff cipher, is an ancient implementation of a transposition cipher where text written along a strip of paper is wrapped around a rod and the resulting sequences of text are read horizontally. This is equivalent to entering characters in a two-dimensional matrix horizontally and reading them vertically. Because the number of characters might not be a multiple of the width of the matrix, extra characters might need to be added at the end. This is called padding and is essential for block ciphers, which encrypt chunks of data at a time.
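The matrix view of the scytale translates directly into code. In this sketch the rod's circumference is modeled as the grid `width`, and the padding character "x" is an arbitrary choice made for the example.

```python
def scytale_encrypt(plaintext: str, width: int, pad: str = "x") -> str:
    """Write text row by row into a grid `width` columns wide, then read column by column."""
    remainder = len(plaintext) % width
    if remainder:
        plaintext += pad * (width - remainder)   # pad the last row to full width
    rows = [plaintext[i:i + width] for i in range(0, len(plaintext), width)]
    return "".join(row[col] for col in range(width) for row in rows)

def scytale_decrypt(ciphertext: str, width: int) -> str:
    """Rebuild the rows; column c of the grid is ciphertext[c*height:(c+1)*height]."""
    height = len(ciphertext) // width
    return "".join(ciphertext[col * height + row]
                   for row in range(height) for col in range(width))

ciphertext = scytale_encrypt("helloworld", 4)
print(ciphertext)                      # holewdloxlrx
print(scytale_decrypt(ciphertext, 4))  # helloworldxx (padding is still attached)
```

The letters themselves are unchanged, so letter frequencies survive encryption; only the positions are scrambled, and the key is effectively the grid width.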

Block ciphers

Most modern ciphers are block ciphers, meaning that they encrypt a chunk of bits, or block, of plaintext at a time. The same key is used to encrypt each successive block of plaintext.

AES and DES are two popular symmetric block ciphers. Symmetric block ciphers are usually implemented as iterative ciphers. The encryption of each block of plaintext iterates over several rounds. Each round uses a subkey, which is a key generated from the main key via a specific set of bit replications, inversions, and transpositions. The subkey is also known as a round key since it is applied to only one round, or iteration. This subkey determines what happens to the block of plaintext as it goes through a substitution-permutation (SP) network. The SP network, guided by the subkey, flips some bits by doing a substitution, which is a table lookup of an input bit pattern to get an output bit pattern, and a permutation, which is a scrambling of bits in a specific order. The output bytes are fed into the next round, which applies a substitution-permutation step using a different subkey. The process continues for several rounds (16 rounds for DES, 10–14 rounds for AES), and the resulting bytes are the ciphertext for the input block.

The iteration through multiple SP steps creates confusion and diffusion. Confusion means that it is extremely difficult to find any correlation between a bit of the ciphertext with any part of the key or the plaintext. A core component of block ciphers is the s-box, which converts n input bits to m output bits, usually via a table lookup. The purpose of the s-box is to add confusion by altering the relationship between the input and output bits.

Diffusion means that any changes to the plaintext are distributed (diffused) throughout the ciphertext so that, on average, half of the bits of the ciphertext would change if even one bit of plaintext is changed.

Feistel ciphers

A Feistel cipher is a form of block cipher that uses a variation of the SP network where a block of plaintext is split into two parts. The substitution-permutation round is applied to only one part. That output is then XORed with the other part and the two halves are swapped. At each round, half of the input block remains unchanged. DES, the Data Encryption Standard, is an example of a Feistel cipher. AES, the Advanced Encryption Standard, is not.
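A toy Feistel network makes the split, XOR, and swap structure concrete. This is a sketch, not DES: the round function (a truncated SHA-256 hash) and the key schedule (hashing the key with the round number) are stand-ins invented for the example. What it does show is a useful property of the design: decryption runs the same network with the subkeys in reverse order, and the round function itself never needs to be inverted.

```python
import hashlib

def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def round_function(half: bytes, subkey: bytes) -> bytes:
    """Toy stand-in for the substitution-permutation step applied to one half."""
    return hashlib.sha256(subkey + half).digest()[:len(half)]

def feistel_encrypt(block: bytes, subkeys: list) -> bytes:
    half = len(block) // 2
    left, right = block[:half], block[half:]
    for subkey in subkeys:
        # The right half passes through unchanged; the left half is XORed with
        # the round output and the halves swap places.
        left, right = right, xor(left, round_function(right, subkey))
    return right + left   # undo the final swap

def feistel_decrypt(block: bytes, subkeys: list) -> bytes:
    # Same network, subkeys applied in reverse order.
    return feistel_encrypt(block, subkeys[::-1])

key = b"main key"
# Hypothetical key schedule: one subkey per round, derived by hashing.
subkeys = [hashlib.sha256(key + bytes([r])).digest() for r in range(4)]

block = b"8 bytes!"
ciphertext = feistel_encrypt(block, subkeys)
print(feistel_decrypt(ciphertext, subkeys))   # b'8 bytes!'
```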

DES

Two popular symmetric block ciphers are DES, the Data Encryption Standard, and AES, the Advanced Encryption Standard. DES was adopted as a federal standard in 1976 and is a block cipher based on the Feistel cipher that encrypts 64-bit blocks using a 56-bit key.

DES has been shown to have some minor weaknesses against cryptanalysis. The key can be recovered using $2^{47}$ chosen plaintexts or $2^{43}$ known plaintexts. Note that this is not a practical amount of data to get for a real attack. The real weakness of DES is not the algorithm but its 56-bit key. An exhaustive search requires $2^{55}$ iterations on average (we assume that, on average, the plaintext is recovered halfway through the search). This was a lot for computers in the 1970s but is not much of a challenge for today’s dedicated hardware or distributed efforts.
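The brute-force arithmetic is easy to check. In the sketch below, the key counts follow directly from the 56-bit key size; the search rates are hypothetical round numbers chosen only to show how the expected time scales, not measurements of any real hardware.

```python
KEY_BITS = 56
SECONDS_PER_DAY = 86_400

keys_total = 2 ** KEY_BITS       # every possible DES key
keys_average = keys_total // 2   # on average the key is found halfway through: 2**55

def average_search_days(keys_per_second: float) -> float:
    """Expected days to find a 56-bit key at the given (hypothetical) search rate."""
    return keys_average / keys_per_second / SECONDS_PER_DAY

# Hypothetical rates, for scale only.
for rate in (1e6, 1e9, 1e12):
    print(f"{rate:.0e} keys/s -> {average_search_days(rate):,.1f} days on average")

# Each additional key bit doubles the work.
assert 2 ** (KEY_BITS + 1) == 2 * keys_total
```

The same doubling is why a 128-bit key is not merely about twice as hard to search as a 56-bit key but harder by a factor of 2 raised to the 72nd power.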

Triple-DES

Triple-DES (3DES) solves the key size problem of DES and allows DES to use keys up to 168 bits. It does this by applying three layers of encryption:

- C' = Encrypt M with key K1

- C'' = Decrypt C' with key K2

- C = Encrypt C'' with key K3

If K1, K2, and K3 are identical, we have the original DES algorithm since the decryption in the second step cancels out the encryption in the first step. If K1 and K3 are the same, we effectively have a 112-bit key and if all three keys are different, we have a 168-bit key.
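The encrypt-decrypt-encrypt structure, and the way it collapses to a single encryption when all keys match, can be shown with any invertible cipher. The sketch below substitutes a trivial byte-shifting toy for DES so that it stays self-contained; the toy has no security value and every function name is an assumption for the example.

```python
def toy_encrypt(block: bytes, key: int) -> bytes:
    """Toy stand-in for DES encryption: add the key to every byte (mod 256)."""
    return bytes((b + key) % 256 for b in block)

def toy_decrypt(block: bytes, key: int) -> bytes:
    """Inverse of toy_encrypt."""
    return bytes((b - key) % 256 for b in block)

def ede_encrypt(m: bytes, k1: int, k2: int, k3: int) -> bytes:
    c1 = toy_encrypt(m, k1)       # C'  = Encrypt M with K1
    c2 = toy_decrypt(c1, k2)      # C'' = Decrypt C' with K2
    return toy_encrypt(c2, k3)    # C   = Encrypt C'' with K3

def ede_decrypt(c: bytes, k1: int, k2: int, k3: int) -> bytes:
    # Undo the three layers in reverse order.
    return toy_decrypt(toy_encrypt(toy_decrypt(c, k3), k2), k1)

message = b"8 bytes!"

# With three identical keys, the middle decryption cancels the first
# encryption, leaving a single encryption.
assert ede_encrypt(message, 7, 7, 7) == toy_encrypt(message, 7)

# With distinct keys, decryption reverses all three layers.
assert ede_decrypt(ede_encrypt(message, 7, 42, 99), 7, 42, 99) == message
```

This backward compatibility is the reason the middle step is a decryption rather than a second encryption: hardware built for 3DES can interoperate with single DES by loading the same key three times.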

Cryptanalysis is not effective with 3DES: the three layers of encryption use 48 rounds instead of 16 making it infeasible to reconstruct the substitutions and permutations that take place. A 168-bit key is too long for a brute-force attack. However, DES is relatively slow compared with other symmetric ciphers, such as AES. It was designed with hardware encryption in mind. 3DES is, of course, three times slower than DES.

AES

AES, the Advanced Encryption Standard, was designed as a successor to DES and became a federal government standard in 2002. It uses a larger block size than DES: 128 bits versus DES’s 64 bits and supports larger key sizes: 128, 192, and 256 bits. Even 128 bits is complex enough to prevent brute-force searches.

No significant academic attacks have been found thus far beyond brute force search. AES is also typically 5–10 times faster in software than 3DES.

Block cipher modes

Electronic Code Book (ECB)

When data is encrypted with a block cipher, it is broken into blocks and each block is encrypted separately. This leads to two problems.

If different encrypted messages contain the same substrings and use the same key, an intruder can deduce that it is the same data.

Secondly, a malicious party can delete, add, or replace blocks (perhaps with blocks that were captured from previous messages).

This basic form of a block cipher is called an electronic code book (ECB). Think of the code book as a database of encrypted content. You can look up a block of plaintext and find the corresponding ciphertext. This is not feasible to implement for arbitrary messages but refers to the historic use of codebooks to convert plaintext messages to ciphertext.

Cipher Block Chaining (CBC)

Cipher block chaining (CBC) addresses these problems. Every block of data is still encrypted with the same key. However, prior to being encrypted, the data block is exclusive-ORed with the previous block of ciphertext. The receiver does the process in reverse: a block of received data is decrypted and then exclusive-ORed with the previously-received block of ciphertext to obtain the original data. The very first block is exclusive-ORed with a random initialization vector, which must be transmitted to the remote side.

Note that CBC does not make the encryption more secure; it simply makes the result of each block of data dependent on all previous blocks. Because of the random initialization vector, even identical content would appear different in ciphertext. An attacker would not be able to tell if any two blocks of ciphertext refer to identical blocks of plaintext. Because of the chaining, even identical blocks in the same ciphertext will appear vastly different. Moreover, because of this chaining, blocks cannot be meaningfully inserted, swapped, or deleted in the message stream without the decryption failing (producing random-looking garbage).
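The difference between ECB and CBC shows up clearly when a message contains repeated blocks. The sketch below uses a toy 16-byte block cipher (a four-round Feistel network built on SHA-256) as a stand-in for AES or DES; it is an assumption made to keep the example self-contained and is not secure. Messages are assumed to be a multiple of the block size, so padding is omitted.

```python
import hashlib
import secrets

BLOCK = 16  # bytes per block

def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def _f(half: bytes, key: bytes, rnd: int) -> bytes:
    return hashlib.sha256(key + bytes([rnd]) + half).digest()[:len(half)]

def encrypt_block(block: bytes, key: bytes) -> bytes:
    """Toy block cipher (stand-in for a real one): 4 Feistel rounds."""
    left, right = block[:8], block[8:]
    for rnd in range(4):
        left, right = right, _xor(left, _f(right, key, rnd))
    return right + left

def decrypt_block(block: bytes, key: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for rnd in reversed(range(4)):
        left, right = right, _xor(left, _f(right, key, rnd))
    return right + left

def split(data: bytes) -> list:
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]

def ecb_encrypt(data: bytes, key: bytes) -> bytes:
    # Every block is encrypted independently.
    return b"".join(encrypt_block(b, key) for b in split(data))

def cbc_encrypt(data: bytes, key: bytes, iv: bytes) -> bytes:
    out, prev = [], iv
    for block in split(data):
        prev = encrypt_block(_xor(block, prev), key)  # XOR with previous ciphertext first
        out.append(prev)
    return b"".join(out)

def cbc_decrypt(data: bytes, key: bytes, iv: bytes) -> bytes:
    out, prev = [], iv
    for block in split(data):
        out.append(_xor(decrypt_block(block, key), prev))  # decrypt, then XOR
        prev = block
    return b"".join(out)

key = b"demo key"
iv = secrets.token_bytes(BLOCK)        # random initialization vector
message = b"SIXTEEN BYTE BLK" * 3      # three identical plaintext blocks

ecb_blocks = split(ecb_encrypt(message, key))
cbc_blocks = split(cbc_encrypt(message, key, iv))

print("distinct ECB blocks:", len(set(ecb_blocks)))  # repeats are visible
print("distinct CBC blocks:", len(set(cbc_blocks)))  # repeats are hidden
```

In ECB the three ciphertext blocks are identical, revealing the repetition; in CBC each block depends on everything before it, so they differ.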

Counter mode (CTR)

Counter mode (CTR) also addresses these problems but in a different way. The ciphertext of each block is a function of its position in the message. Encryption starts with a message counter. The counter is incremented for each block of input. Only the counter is encrypted. The resulting ciphertext is then exclusive-ORed with the corresponding block of plaintext, producing a block of message ciphertext. To decrypt, the receiver does the same thing and needs to know the starting value of the counter as well as the key.

An advantage of CTR mode is that each block has no dependence on other blocks and encryption on multiple blocks can be done in parallel.
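Counter mode can be sketched without an invertible block cipher, since only the counter is ever encrypted. In this illustration, a truncated HMAC-SHA256 of the counter stands in for block-encrypting it; that substitution, the function names, and the starting counter value are all assumptions made for the example.

```python
import hashlib
import hmac

BLOCK = 16  # bytes per block

def encrypt_counter(key: bytes, counter: int) -> bytes:
    """Stand-in for encrypting the counter with a block cipher."""
    return hmac.new(key, counter.to_bytes(BLOCK, "big"), hashlib.sha256).digest()[:BLOCK]

def ctr_xor(data: bytes, key: bytes, start: int) -> bytes:
    """Encrypt or decrypt: XOR each block with the encrypted counter for its position."""
    out = bytearray()
    for i in range(0, len(data), BLOCK):
        pad = encrypt_counter(key, start + i // BLOCK)   # counter increments per block
        out.extend(d ^ p for d, p in zip(data[i:i + BLOCK], pad))
    return bytes(out)

key = b"demo key"
start = 1000                           # starting counter value, shared with the receiver
message = b"SIXTEEN BYTE BLK" * 3      # three identical plaintext blocks

ciphertext = ctr_xor(message, key, start)
recovered = ctr_xor(ciphertext, key, start)   # decryption is the same operation

# Any block can be processed on its own, given its position.
second_block = ctr_xor(ciphertext[BLOCK:2 * BLOCK], key, start + 1)
```

Because each block's pad depends only on the key and the block's position, the blocks can be handled in any order or in parallel. The same independence means a starting counter must never be reused with the same key, since that would reuse the pad.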

Cryptanalysis

The goal of cryptanalysis is to break codes. Most often, it is to identify some non-random behavior of an algorithm that will give the analyst an advantage over an exhaustive search of the key space.

Differential cryptanalysis seeks to identify non-random behavior by examining how changes in plaintext input affect changes in the output ciphertext. It tries to find whether certain bit patterns are unlikely for certain keys or whether the change in plaintext results in likely changes in the output.

Linear cryptanalysis tries to create equations that attempt to predict the relationships between ciphertext, plaintext, and the key. An equation will never be equivalent to a cipher but any correlation of bit patterns gives the analyst an advantage.

Neither of these methods will break a code directly but may help find keys or data that are more likely or that are unlikely. This reduces the number of keys that need to be searched.

Public key cryptography

Public key algorithms, also known as asymmetric ciphers, use one key for encryption and another key for decryption. One of these keys is kept private (known only to the creator) and is known as the private key. The corresponding key is generally made visible to others and is known as the public key.

Anything encrypted with the private key can only be decrypted with the public key. This is the basis for digital signatures. Anything that is encrypted with a public key can be decrypted only with the corresponding private key. This is the basis for authentication and covert communication.

Public and private keys are related but, given one of the keys, there is no feasible way of computing the other. They are based on trapdoor functions, which are one-way functions: there is no known way to compute the inverse unless you have extra data: the other key.

RSA public key cryptography

The RSA algorithm is the most popular algorithm for asymmetric cryptography. Its security is based on the difficulty of finding the factors of the product of two large prime numbers. Unlike symmetric ciphers, RSA encryption is a matter of performing arithmetic on large numbers. It is also a block cipher and plaintext is converted to ciphertext by the formula:

$c = m^e \bmod n$

Where m is a block of plaintext, e is the encryption key, and n is an agreed-upon modulus that is the product of two primes. To decrypt the ciphertext, you need the decryption key, d:

$m = c^d \bmod n$

Given the ciphertext c, e, and n, there is no efficient way to compute the inverse to obtain m. Should an attacker find a way to factor n into its two prime factors, however, the attacker would be able to reconstruct the decryption key, d, from the public encryption key, e.
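A worked example with deliberately tiny numbers may make the arithmetic concrete. The primes 61 and 53, the exponent 17, and the message value 65 are illustrative choices, not values from any real system; actual RSA moduli are thousands of bits long.

```python
# Toy parameters: real RSA uses primes that are each 1024 bits or longer.
p, q = 61, 53
n = p * q                    # modulus: 3233
phi = (p - 1) * (q - 1)      # 3120; computing this requires knowing p and q
e = 17                       # encryption exponent, chosen coprime with phi
d = pow(e, -1, phi)          # decryption exponent: modular inverse of e (Python 3.8+)

m = 65                       # a block of plaintext, which must be smaller than n
c = pow(m, e, n)             # c = m^e mod n
recovered = pow(c, d, n)     # m = c^d mod n
```

Here `d` works out to 2753 and `c` to 2790. The line that computes `d` needs `phi`, which in turn needs the factors of `n`; that is why an attacker who can factor `n` can derive the decryption key from the public values.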

Elliptic curve cryptography (ECC)

Elliptic curve cryptography (ECC) is a more recent public key algorithm that is an alternative to RSA. It is based on finding points along a prescribed elliptic curve, which is an equation of the form:

$y^2 = x^3 + ax + b$

Contrary to their name, elliptic curves have nothing to do with ellipses or conic sections and look like bumpy lines. With elliptic curves, multiplying a point on a given elliptic curve by a number will produce another point on the curve. However, given that result, it is difficult to find what number was used. The security in ECC rests not on our inability to factor numbers but on our inability to compute discrete logarithms in a finite field.
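Point multiplication can be illustrated on a textbook-sized curve. The sketch below assumes the curve $y^2 = x^3 + 2x + 2$ over the integers mod 17 with starting point (5, 1); these parameters and the function names are chosen only for illustration, and real curves use primes of 256 bits or more.

```python
# Toy curve y^2 = x^3 + 2x + 2 over the integers mod 17.
P_MOD, A, B = 17, 2, 2
G = (5, 1)  # a point on the curve used as the starting point

def on_curve(pt):
    if pt is None:                       # None represents the point at infinity
        return True
    x, y = pt
    return (y * y - (x ** 3 + A * x + B)) % P_MOD == 0

def add(p1, p2):
    """Add two points on the curve."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None                      # P + (-P) is the point at infinity
    if p1 == p2:                         # doubling uses the tangent slope
        slope = (3 * x1 * x1 + A) * pow((2 * y1) % P_MOD, -1, P_MOD)
    else:                                # otherwise use the chord slope
        slope = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD)
    x3 = (slope * slope - x1 - x2) % P_MOD
    y3 = (slope * (x1 - x3) - y1) % P_MOD
    return (x3, y3)

def multiply(k, pt):
    """Compute k * pt with the double-and-add method."""
    result = None
    while k:
        if k & 1:
            result = add(result, pt)
        pt = add(pt, pt)
        k >>= 1
    return result

public_point = multiply(7, G)   # easy to compute; recovering 7 from it is the hard part
```

On this tiny curve an attacker could simply try every multiplier, since there are only 19 points. With a 256-bit field the same search is infeasible, which is the one-way property ECC relies on.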

The RSA algorithm is still the most widely used public key algorithm, but ECC has some advantages:

ECC can use far shorter keys for the same degree of security. Security comparable to 256-bit AES encryption requires a 512-bit ECC key but a 15,360-bit RSA key.

ECC requires less CPU consumption and uses less memory than RSA. It is faster for encryption (including signature generation) than RSA but slower for decryption.

Generating ECC keys is faster than RSA (but much slower than AES, where a key is just a random number).

On the downside, ECC is more complex to implement and decryption is slower than with RSA. As a standard, ECC was also tainted because the NSA inserted weaknesses into the ECC random number generator that effectively created a backdoor for decrypting content. This has been remedied and ECC is generally considered the preferred choice over RSA for most applications.

If you are interested, see here for a somewhat easy-to-understand tutorial on ECC.

Quantum computing

Quantum computers are a markedly different form of computer. Conventional computers store and process information that is represented in bits, with each bit having a distinct value of 0 or 1. Quantum computers use the principles of quantum mechanics, which include superposition and entanglement. Instead of working with bits, quantum computers operate on qubits, which can hold values of “0” and “1” simultaneously via superposition. The superpositions of qubits can be entangled with other objects so that their final outcomes will be mathematically related. A single operation can be carried out on $2^n$ values simultaneously, where n is the number of qubits in the computer.

While practical quantum computers don’t exist, it’s predicted that certain problems may be solved exponentially faster than with conventional computers. Shor’s algorithm, for instance, will be able to find the prime factors of large integers and compute discrete logarithms far more efficiently than is currently possible.

So far, quantum computers are very much in their infancy, and it is not clear when – or if – large-scale quantum computers that are capable of solving useful problems will be built. It is unlikely that they will be built in the next several years but we expect that they will be built eventually. Shor’s algorithm will be able to crack public-key based systems such as RSA, Elliptic Curve Cryptography, and Diffie-Hellman key exchange. In 2016, the NSA called for a migration to “post-quantum cryptographic algorithms” and has currently narrowed down the submissions to 26 candidates. The goal is to find useful trapdoor functions that do not rely on multiplying large primes, computing exponents, or any other mechanisms that can be attacked by quantum computation. If you are interested in these, you can read the NSA’s report.

Symmetric cryptosystems, such as AES, are not particularly vulnerable to quantum computing since they rely on moving and flipping bits rather than applying mathematical functions on the data. The best potential attacks come via Grover’s algorithm, which yields only a quadratic rather than an exponential speedup in key searches. This effectively halves the length of a key: searching a space of $2^{128}$ keys takes on the order of $2^{64}$ operations, so a 128-bit key will have the strength of a 64-bit key on a conventional computer. It is easy enough to use a sufficiently long key (256-bit AES keys are currently recommended) so that quantum computing poses no threat to symmetric algorithms.

Secure communication

Symmetric cryptography

Communicating securely with symmetric cryptography is easy. All communicating parties must share the same secret key. Plaintext is encrypted with the secret key to create ciphertext and then transmitted or stored. It can be decrypted by anyone who has the secret key.

Asymmetric cryptography

Communicating securely with asymmetric cryptography is a bit different. Anything encrypted with one key can be decrypted only by the other related key. For Alice to encrypt a message for Bob, she encrypts it with Bob’s public key. Only Bob has the corresponding key that can decrypt the message: Bob’s private key.

Hybrid cryptography

Asymmetric cryptography alleviates the problem of transmitting a key over an unsecure channel. However, it is considerably slower than symmetric cryptography. AES, for example, is approximately 1,500 times faster for decryption than RSA and 40 times faster for encryption. AES is also much faster than ECC. Key generation is also far slower with RSA or ECC than it is with symmetric algorithms, where the key is just a random number rather than a set of carefully chosen numbers with specific properties. Moreover, certain keys with RSA may be weaker than others.

Because of these factors, RSA and ECC are never used to encrypt large chunks of information. Instead, it is common to use hybrid cryptography, where a public key algorithm is used to encrypt a randomly-generated key that will encrypt the message with a symmetric algorithm. This randomly-generated key is called a session key, since it is generally used for one communication session and then discarded.

Key Exchange

The biggest problem with symmetric cryptography is key distribution. For Alice and Bob to communicate, they must share a secret key that no adversaries can get. However, Alice cannot send the key to Bob since it would be visible to adversaries. She cannot encrypt it because Alice and Bob do not share a key yet.

Diffie-Hellman key exchange

The Diffie-Hellman key exchange algorithm allows two parties to establish a common key without disclosing any information that would allow any other party to compute the same key. Each party generates a private key and a public key. Despite their name, these are not encryption keys; they are just numbers. Diffie-Hellman does not implement public key cryptography. Alice can compute a common key using her private key and Bob’s public key. Bob can compute the same common key by using his private key and Alice’s public key.

Diffie-Hellman uses the one-way function $a^b \bmod c$. Its one-wayness is due to our inability to compute the inverse: a discrete logarithm. Anyone may see Alice and Bob’s public keys but will be unable to compute their common key. Although Diffie-Hellman is not a public key encryption algorithm, it behaves like one in the sense that it allows us to exchange keys without having to use a trusted third party.
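The exchange can be sketched with Python's built-in modular exponentiation. The modulus (the Mersenne prime $2^{127} - 1$) and the base 3 are toy parameters assumed for this example; real deployments use standardized groups of 2048 bits or more, and the function name `keypair` is invented here.

```python
import secrets

# Toy public parameters, known to everyone including adversaries.
p = 2**127 - 1   # prime modulus
g = 3            # base

def keypair():
    private = secrets.randbelow(p - 3) + 2   # random integer in [2, p - 2]
    public = pow(g, private, p)              # one-way: g^private mod p
    return private, public

alice_private, alice_public = keypair()
bob_private, bob_public = keypair()

# Each side combines its own private key with the other side's public key.
alice_common = pow(bob_public, alice_private, p)
bob_common = pow(alice_public, bob_private, p)
```

Both sides arrive at the same value because $(g^b)^a \bmod p = (g^a)^b \bmod p$. An eavesdropper sees only `alice_public` and `bob_public` and would need a discrete logarithm to recover either private key.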

Key exchange using public key cryptography

With public key cryptography, there generally isn’t a need for key exchange. As long as both sides can get each other’s public keys from a trusted source, they can encrypt messages using those keys. However, we rarely use public key cryptography for large messages. It can, however, be used to transmit a session key. This use of public key cryptography to transmit a session key that will be used to apply symmetric cryptography to messages is called hybrid cryptography. For Alice to send a key to Bob:

- Alice generates a random session key.

- She encrypts it with Bob’s public key & sends it to Bob.

- Bob decrypts the message using his private key and now has the session key.

Bob is the only one who has Bob’s private key to be able to decrypt that message and extract the session key. A problem with this is that anybody can do this. Charles can generate a random session key, encrypt it with Bob’s public key, and send it to Bob. For Bob to be convinced that it came from Alice, she can encrypt it with her private key (this is signing the message).

- Alice generates a random session key.

- She signs it by encrypting the key with her private key.

- She encrypts the result with Bob’s public key & sends it to Bob.

- Bob decrypts the message using his private key.

- Bob decrypts the resulting message with Alice’s public key and gets the session key.

If anybody other than Alice created the message, the result that Bob gets by decrypting it with Alice’s public key will not result in a valid key for anyone. We can enhance the protocol by using a standalone signature (encrypted hash) so Bob can identify a valid key from a bogus one.

Forward secrecy and key types

If an attacker steals Bob’s private key, they will be able to decrypt old session keys. This is because, at the start of every communication session with Bob, the session key is typically encrypted using Bob’s public key. Once the attacker has Bob’s private key, they can retroactively decrypt these session keys and access past communications.

Forward Secrecy

Forward secrecy, also known as perfect forward secrecy (PFS), ensures that the compromise of a long-term key (e.g., Bob’s private key) does not compromise past session keys. This means there is no secret that, if stolen, allows an attacker to decrypt multiple past messages.

Forward secrecy is valuable for communication sessions but not for stored encrypted documents. In communications, the goal is to prevent an attacker from retroactively decrypting old conversations, even if they later obtain a user’s private key. However, encrypted documents must remain decryptable by the legitimate user, which requires reliance on a long-term key.

Diffie-Hellman and Forward Secrecy

Diffie-Hellman key exchange enables forward secrecy by allowing Alice and Bob to generate temporary (ephemeral) key pairs for each session. The process works as follows:

- Alice and Bob each generate a new public-private key pair and exchange their public keys.

- Using their own private key and the received public key, they compute a shared secret.

- This shared secret is used to encrypt their communication for that session.

- For the next session, they generate new key pairs and derive a new shared secret.

Since a new set of keys is used for every session, even if an attacker later compromises Bob’s private key, they cannot decrypt past messages because those sessions used different keys.

In contrast, encrypting a session key with a long-term key (such as Bob’s public key in an RSA-based system) does not provide forward secrecy. If an attacker gains access to Bob’s private key, they can decrypt all past session keys and decrypt old communications.
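The difference can be illustrated by running several sessions that each use fresh Diffie-Hellman key pairs. This is a sketch under assumptions: the group parameters are toy values, both parties are simulated inside one function (`run_session`, a name invented here), and hashing the shared secret with SHA-256 is just one simple way to turn it into a session key.

```python
import hashlib
import secrets

p, g = 2**127 - 1, 3   # toy public parameters

def run_session() -> bytes:
    """Simulate one session: both sides create ephemeral keys, agree on a
    shared secret, and derive a symmetric session key from it."""
    a = secrets.randbelow(p - 3) + 2      # Alice's ephemeral private key
    b = secrets.randbelow(p - 3) + 2      # Bob's ephemeral private key
    A, B = pow(g, a, p), pow(g, b, p)     # public keys, exchanged in the clear
    alice_secret = pow(B, a, p)
    bob_secret = pow(A, b, p)
    assert alice_secret == bob_secret
    # a and b go out of scope when the function returns: nothing is kept
    # that could later be stolen to rebuild this session's key.
    return hashlib.sha256(alice_secret.to_bytes(16, "big")).digest()

session_keys = [run_session() for _ in range(3)]
```

Each session ends with an unrelated key, and no long-term secret took part in deriving any of them, so compromising a key later reveals nothing about earlier sessions.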

Types of Cryptographic Keys

- Long-Term Keys: These keys persist across multiple sessions and are used for authentication, identity verification, and encrypting stored data. Examples include RSA or ECC private keys used to create digital signatures and digital certificates.

- Ephemeral Keys: These are temporary, single-use keys generated for a session and discarded afterward. Diffie-Hellman and Elliptic Curve Diffie-Hellman are examples used to achieve forward secrecy.

- Session Keys: These are symmetric encryption keys used for a single communication session. They are often derived from ephemeral key exchanges and are used to encrypt messages between parties for the duration of the session.

Ephemeral keys are discarded as soon as a session key is established. Session keys can be discarded when the communication session ends.

Diffie-Hellman is particularly useful for achieving forward secrecy because it allows efficient on-the-fly key pair generation. While RSA or ECC keys could theoretically be used for ephemeral key exchange, key generation for RSA and ECC is computationally expensive. As a result, RSA and ECC keys are typically used as long-term keys (e.g., for authentication and digital signatures) rather than for generating new session keys dynamically.

Message Integrity

One-way and Trapdoor Functions

A one-way function is easy to compute but infeasible to invert. Given an output, finding the original input is computationally impractical. These functions are the foundation of cryptographic hash functions (e.g., SHA-256), which ensure data integrity, password security, and digital signatures.

A trapdoor function is a one-way function with a secret trapdoor that allows efficient inversion. These functions are fundamental to public-key cryptography, including Diffie-Hellman, RSA, and ECC, enabling secure encryption, decryption, and digital signatures.

| Feature | One-Way Function | Trapdoor Function |
|---|---|---|
| Inversion | Computationally infeasible | Feasible with the secret trapdoor |
| Key Use | Hash functions | Public-key cryptography |
| Security Role | Integrity & authentication | Encryption & key exchange |

One-way functions secure hash-based applications, while trapdoor functions enable asymmetric encryption.

Hash functions

A particularly important class of one-way functions is the cryptographic hash function. These functions produce a fixed-size output, regardless of the input size, making them invaluable in various applications. In general computing, hash functions are often used to build hash tables, enabling O(1) key lookups.

However, cryptographic hash functions differ from standard hash functions in that they generate significantly longer outputs—typically 224, 256, 384, or 512 bits. Strong cryptographic hash functions, such as SHA-2 and SHA-3 families, must exhibit several essential properties:

1. Fixed-Length Output – Like all hash functions, cryptographic hash functions take an input of arbitrary length and produce a fixed-size output.

2. Deterministic – They always produce the same hash for the same input, ensuring consistency.

3. Pre-image Resistance (Hiding) – Given a hash value H, it should be computationally infeasible to determine the original input M such that H = hash(M).

4. Avalanche Effect – The output of a hash function should not give any information about any part of the input. Small changes in the input should result in significantly different hash outputs, preventing any predictable relationship between input and output. For example, changing a byte in the message should result in a completely different hash result, with no ability to predict which bits would flip. The avalanche effect is the result of good diffusion.

5. Collision Resistance – While hash collisions must theoretically exist (due to the pigeonhole principle), it should be infeasible to find two distinct inputs that produce the same hash. Likewise (see item 4, above), modifying a message should alter the hash in an unpredictable way.

6. Efficient – Hash functions should be computationally efficient, allowing rapid generation of hashes for applications like message integrity verification without excessive overhead.
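Several of these properties can be observed directly with Python's standard `hashlib` module. The two messages below are made-up examples that differ in a single character, and `differing_bits` is a helper written for this sketch.

```python
import hashlib

def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length byte strings differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

h1 = hashlib.sha256(b"Pay Bob $100").digest()
h2 = hashlib.sha256(b"Pay Bob $900").digest()   # one character changed

digest_bits = len(h1) * 8            # 256, regardless of input length
changed = differing_bits(h1, h2)     # number of output bits that flipped
```

For a function with good diffusion, `changed` should land near half of the 256 output bits, with no way to predict which ones flip. Hashing the same input again always reproduces `h1`.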

Cryptographic hash functions form the foundation of message authentication codes (MACs) and digital signatures, playing a crucial role in ensuring data integrity and authentication.

Due to their properties, we can be highly confident that even the smallest modification to a message will produce a completely different hash. However, the “holy grail” for an attacker is finding a way to create a different, but useful, message that hashes to the same value as a legitimate one. Such an attack could allow message substitution, potentially leading to serious consequences—such as redirecting a financial transaction.

Finding a collision for a specific, known message (pre-image attack) is significantly harder than finding any two different messages that hash to the same value (collision attack). The birthday paradox explains why: the number of attempts needed to find any collision is approximately proportional to the square root of the total number of possible hashes. As a result, the security strength of a hash function against brute-force collision attacks is roughly half the number of bits in the hash output. For example, a 256-bit hash function provides approximately 128-bit security against such attacks.
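The birthday effect is easy to reproduce if the hash is shortened enough to make a search practical. The sketch below truncates SHA-256 to 16 bits, an assumption made purely so the loop finishes instantly; the function names and the choice of hashing successive counter values are illustrative.

```python
import hashlib

def truncated_hash(data: bytes, nbytes: int = 2) -> bytes:
    """First nbytes of SHA-256: a deliberately weak 16-bit hash by default."""
    return hashlib.sha256(data).digest()[:nbytes]

def find_collision(nbytes: int = 2):
    """Hash successive messages until any two share a truncated hash."""
    seen = {}
    counter = 0
    while True:
        message = str(counter).encode()
        digest = truncated_hash(message, nbytes)
        if digest in seen:
            return seen[digest], message, counter + 1
        seen[digest] = message
        counter += 1

m1, m2, attempts = find_collision()
```

With 65,536 possible outputs, a collision typically appears after a few hundred attempts (on the order of the square root of 65,536, which is 256), far fewer than the tens of thousands of tries expected when searching for a match to one specific hash value.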

Common cryptographic hash functions include:

- SHA-1 (160-bit output) – now considered weak due to known vulnerabilities.

- SHA-2 (e.g., SHA-256, SHA-512) – widely used and considered secure.

- SHA-3 (e.g., SHA3-256, SHA3-512) – designed as a secure alternative to SHA-2.

Message Authentication Codes (MACs)

A cryptographic hash function helps ensure message integrity by acting as a checksum, allowing detection of any modifications to a message. If a message is altered, its hash will change. However, standard hashes alone do not provide authentication—an attacker could modify both the message and its hash without detection. To address this, we use a cryptographic hash that incorporates a secret key, creating a message authentication code (MAC). Only those who possess the key can generate or verify a valid MAC.

There are two main types of MACs: hash-based and block cipher-based.

Hash-Based MAC (HMAC):

An HMAC transforms a cryptographic hash function (e.g., SHA-256) into a MAC by incorporating a secret key. The message and key are processed together, ensuring that only someone with the correct key can generate or verify the MAC. Without knowledge of the key, an attacker cannot forge a valid MAC, even if they can see previous message-MAC pairs.
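Python's standard library includes an `hmac` module, so generating and checking an HMAC takes only a few lines. The key, the messages, and the helper name `verify` below are illustrative assumptions.

```python
import hashlib
import hmac
import secrets

key = secrets.token_bytes(32)            # secret shared by sender and receiver
message = b"transfer $100 to Bob"
tag = hmac.new(key, message, hashlib.sha256).digest()   # sent along with the message

def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the MAC and compare it with the received one."""
    expected = hmac.new(key, message, hashlib.sha256).digest()
    # compare_digest takes the same time whether or not the values match,
    # which avoids leaking information through timing.
    return hmac.compare_digest(expected, tag)
```

A receiver holding the same key accepts the original message, while a modified message or a MAC computed with a different key fails verification.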

Block Cipher-Based MAC (CBC-MAC):

Cipher Block Chaining (CBC) mode ensures that each encrypted block depends on all previous blocks. CBC-MAC leverages this by initializing encryption with a zero initialization vector (IV), encrypting the message in CBC mode, and using only the final encrypted block as the MAC. Any modification to the message propagates through the encryption process, altering the final block and invalidating the MAC.

While CBC-MAC produces a fixed-length result similar to a hash function, it relies on symmetric encryption rather than a hash function for security. Unlike HMAC, its security depends on the underlying block cipher and requires careful handling to prevent certain attacks (e.g., misuse with variable-length messages).
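The chaining structure of CBC-MAC can be sketched as follows. This is not a secure implementation: `toy_encrypt_block` is a hypothetical stand-in built from SHA-256 in place of a real block cipher such as AES, and the zero-padding rule is an assumption made to keep the example short.

```python
import hashlib

BLOCK = 16  # block size in bytes

def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    """Stand-in for E_key(block). NOT a real block cipher."""
    return hashlib.sha256(key + block).digest()[:BLOCK]

def cbc_mac(key: bytes, message: bytes) -> bytes:
    # Zero-pad to a whole number of blocks. Naive padding like this is one
    # reason plain CBC-MAC needs care with variable-length messages.
    padded = message + b"\x00" * (-len(message) % BLOCK)
    state = bytes(BLOCK)                                    # zero IV
    for i in range(0, len(padded), BLOCK):
        block = padded[i:i + BLOCK]
        mixed = bytes(s ^ b for s, b in zip(state, block))  # chain with previous output
        state = toy_encrypt_block(key, mixed)
    return state                                            # only the final block is kept

key = b"k" * 16
message = b"block one ......block two ......"
mac = cbc_mac(key, message)
```

Changing any byte of the message, even in the first block, alters every later chaining value and therefore the final block that serves as the MAC.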

Digital signatures

Message authentication codes (MACs) rely on a shared secret key, meaning that anyone with the key can generate or verify a MAC. However, this does not guarantee that the original author of the message was the one who signed it—any key holder can modify and re-sign the message.

Digital signatures provide stronger guarantees than MACs:

- Only the original signer can generate a valid signature, but anyone can verify it.

- Signatures are message-specific—copying a signature to a different message invalidates it.

- Forgery is infeasible, even if an attacker has seen numerous signed messages.

A digital signature system consists of three fundamental operations:

- Key generation: {private_key, verification_key} := gen_keys(keysize)

Generates a key pair: a private key for signing and a public verification key for validation.

- Signing: signature := sign(message, private_key)

Creates a digital signature using the private key.

- Validation: isvalid := verify(message, signature, verification_key)

Checks whether a signature is valid using the public verification key.
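The three operations can be sketched with textbook RSA applied to a message hash. Several simplifications are assumed here: `gen_keys` takes no key size and uses two fixed Mersenne primes instead of large random ones, and the hash is simply reduced modulo n rather than padded the way real signature schemes require.

```python
import hashlib

def gen_keys():
    # Fixed primes keep the sketch short; real key generation picks large
    # random primes so that the modulus is 2048 bits or more.
    p, q = 2**127 - 1, 2**61 - 1
    n, e = p * q, 65537
    d = pow(e, -1, (p - 1) * (q - 1))
    return (n, d), (n, e)            # (private_key, verification_key)

def digest_as_int(message: bytes, n: int) -> int:
    # Reducing the hash mod n is a toy shortcut, not a real padding scheme.
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(message: bytes, private_key) -> int:
    n, d = private_key
    return pow(digest_as_int(message, n), d, n)

def verify(message: bytes, signature: int, verification_key) -> bool:
    n, e = verification_key
    return pow(signature, e, n) == digest_as_int(message, n)

private_key, verification_key = gen_keys()
signature = sign(b"I owe Bob $100", private_key)
isvalid = verify(b"I owe Bob $100", signature, verification_key)
```

Verification needs only the public verification key, and the same signature fails when checked against any other message.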

Signing hashes instead of messages

Since cryptographic hashes are designed to be collision-resistant, it is common practice to sign the hash of a message rather than the message itself. This approach ensures that:

- The signature is small and fixed in size, regardless of message length.

- The signature is efficient to compute and verify.

- The signature integrates seamlessly into data structures that need it.

- It creates minimal transmission or storage overhead.

There are several commonly used digital signature algorithms:

- DSA, the Digital Signature Algorithm: The current NIST standard, based on the difficulty of computing discrete logarithms.

- ECDSA, the Elliptic Curve Digital Signature Algorithm: A variant of DSA that uses elliptic curve cryptography (ECC), providing equivalent security with smaller key sizes than traditional DSA.

- Public key cryptographic algorithms: RSA encryption principles are used to sign message hashes. Unlike DSA and ECDSA, which are dedicated signature schemes, RSA is a general-purpose public-key cryptosystem that can be used for both encryption and signing.

All these algorithms use public and private key pairs.

We previously saw how public-key cryptography allows encryption:

- Alice encrypts a message with Bob’s public key, ensuring that only Bob can decrypt it with his private key.

Digital signatures work in a similar way, but in reverse:

- Alice “encrypts” (signs) a message hash using her private key.

- Anyone with Alice’s public key can decrypt and verify the signature, confirming that the message was signed by Alice.

Instead of encrypting the entire message, most digital signature algorithms apply a hash function first, then sign the hash using a trapdoor function (such as modular exponentiation in RSA or ECC operations in ECDSA). This ensures that only the signer can generate a valid signature, but anyone can verify it using the public key.

Unlike MACs, digital signatures provide non-repudiation—proof that a specific entity signed a message. Alice cannot deny creating a signature, as only her private key could have produced it.

Both MACs and digital signatures provide message integrity, ensuring that the message has not been altered. However, digital signatures go further by allowing anyone to verify authenticity without requiring a shared secret key.

| Property | Message Authentication Codes (MACs) | Digital Signatures |
|---|---|---|
| Key Type | Shared secret key | Public-private key pair |
| Who Can Sign? | Anyone with the key | Only the private key holder |
| Who Can Verify? | Only those with the key | Anyone with the public key |
| Non-Repudiation | No (any key holder can generate a MAC) | Yes (only the private key holder can sign) |
| Integrity Proof | Yes | Yes |
| Publicly Verifiable? | No (requires the secret key) | Yes |

Covert and authenticated messaging

We ignored the encryption of a message in the preceding discussion; our interest was assuring integrity. However, there are times when we may want to keep the message secret and validate that it has not been modified. Doing this involves sending a signature of the message along with the encrypted message.

A basic way for Alice to send a signed and encrypted message to Bob is for her to use hybrid cryptography and:

- Create a signature of the message. This is a hash of the message encrypted with her private key.

- Create a session key for encrypting the message. This is a throw-away key that will not be needed beyond the communication session.

- Encrypt the message using the session key. She will use a fast symmetric algorithm to encrypt this message.

- Package up the session key for Bob: she encrypts it with Bob’s public key. Since only Bob has the corresponding private key, only Bob will be able to decrypt the session key.

- She sends Bob: the encrypted message, encrypted session key, and signature.
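The steps above can be sketched end to end with toy building blocks. Everything here is an assumption made for illustration: the key pairs are textbook RSA built from fixed Mersenne primes, `stream_xor` is a SHA-256-based keystream standing in for a fast symmetric cipher such as AES, and the function names are invented.

```python
import hashlib
import secrets

def toy_rsa_keys(p: int, q: int, e: int = 65537):
    n = p * q
    return (n, pow(e, -1, (p - 1) * (q - 1))), (n, e)    # (private, public)

def hash_int(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def stream_xor(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR the data with a hash-derived keystream."""
    blocks = (len(data) + 31) // 32
    stream = b"".join(hashlib.sha256(key + i.to_bytes(8, "big")).digest()
                      for i in range(blocks))
    return bytes(d ^ s for d, s in zip(data, stream))

alice_private, alice_public = toy_rsa_keys(2**127 - 1, 2**61 - 1)
bob_private, bob_public = toy_rsa_keys(2**107 - 1, 2**89 - 1)

def alice_send(message: bytes):
    n_a, d_a = alice_private
    signature = pow(hash_int(message, n_a), d_a, n_a)     # hash encrypted with her private key
    session_key = secrets.token_bytes(16)                 # throw-away session key
    encrypted_message = stream_xor(session_key, message)  # fast symmetric encryption
    n_b, e_b = bob_public
    encrypted_key = pow(int.from_bytes(session_key, "big"), e_b, n_b)  # only Bob can unwrap
    return encrypted_message, encrypted_key, signature

def bob_receive(encrypted_message: bytes, encrypted_key: int, signature: int):
    n_b, d_b = bob_private
    session_key = pow(encrypted_key, d_b, n_b).to_bytes(16, "big")
    message = stream_xor(session_key, encrypted_message)
    n_a, e_a = alice_public
    valid = pow(signature, e_a, n_a) == hash_int(message, n_a)
    return message, valid

packet = alice_send(b"Meet at noon")
message, valid = bob_receive(*packet)
```

Bob recovers the plaintext and confirms the signature with Alice's public key. If the encrypted message is altered in transit, the decrypted text no longer matches the signed hash and `valid` comes back false.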

Anonymous identities

A signature verification key (e.g., a public key) can be treated as an identity. You possess the corresponding private key and therefore only you can create valid signatures that can be verified with the public key. This identity is anonymous; it is just a bunch of bits. There is nothing that identifies you as the holder of the key. You can simply assert your identity by being the sole person who can generate valid signatures.

Since you can generate an arbitrary number of key pairs, you can create a new identity at any time and create as many different identities as you want. When you no longer need an identity, you can discard your private key for that corresponding public key.

Identity binding: digital certificates

While public keys provide a mechanism for asserting integrity via digital signatures, they are themselves anonymous. We’ve discussed a scenario where Alice uses Bob’s public key but never explained how she can assert that the key really belongs to Bob and was not planted by an adversary. Some form of identity binding of the public key must be implemented for you to know that you really have my public key instead of someone else’s. How does Alice really know that she has Bob’s public key?

X.509 digital certificates provide a way to do this. A certificate is a data structure that contains user information (called a distinguished name) and the user’s public key. This data structure also contains a signature of the certification authority. The signature is created by taking a hash of the rest of the data in the structure and encrypting it with the private key of the certification authority. The certification authority (CA) is responsible for setting policies of how they validate the identity of the person who presents the public key for encapsulation in a certificate.

To validate a certificate, you would hash all the certificate data except for the signature. Then you would decrypt the signature using the public key of the issuer. If the two values match, then you know that the certificate data has not been modified since it has been signed. The challenge is how to get the public key of the issuer. Public keys are stored in certificates, so the issuer would have a certificate containing its public key. This certificate can be signed by yet another issuer. This kind of process is called certificate chaining. For example, Alice can have a certificate issued by the Rutgers CS Department. The Rutgers CS Department’s certificate may be issued by Rutgers University. Rutgers University’s certificate could be issued by the State of New Jersey Certification Authority, and so on. At the very top level, we will have a certificate that is not signed by any higher-level certification authority. A certification authority that is not underneath any other CA is called a root CA. In practice, this type of chaining is rarely used. More commonly, there are hundreds of autonomous certification authorities acting as root CAs that issue certificates to companies, users, and services. The certificates for many of the trusted root CAs are preloaded into operating systems or, in some cases, browsers. See here for Microsoft’s trusted root certificate participants and here for Apple’s trusted root certificates.

Every certificate has an expiration time (often a year or more in the future). This provides some assurance that even if there is a concerted attack to find a corresponding private key to the public key in the certificate, such a key will not be found until long after the certificate expires. There might be cases where a private key might be leaked or the owner may no longer be trustworthy (for example, an employee leaves a company). In this case, a certificate can be revoked. Each CA publishes a certificate revocation list, or CRL, containing lists of certificates that they have previously issued that should no longer be considered valid. To prevent spoofing the CRL, the list is, of course, signed by the CA. Each certificate contains information on where to obtain revocation information.

The challenge with CRLs is that not everyone may check the certificate revocation list in a timely manner and some systems may accept a certificate not knowing that it was revoked. Some systems, particularly embedded systems, may not even be configured to handle CRLs.

Code signing - protecting code integrity

We have seen how hash functions are used for message integrity through message authentication codes (MACs) (which rely on a shared key) and digital signatures (which use public and private keys). The same cryptographic principles apply to code signing, a process that ensures software has not been modified since it was created by the developer.

Code signing protects against tampered or malicious software. It allows operating systems to verify software authenticity, detect unauthorized modifications, and prevent execution of compromised applications—all without requiring users to manually inspect their downloads.

Signing software allows it to be downloaded from untrusted sources or distributed over untrusted channels while still ensuring it has not been altered. It also enables the detection of malware that may have modified software after installation.

Modern operating systems such as Microsoft Windows, Apple macOS, iOS, and Android extensively use code signing to validate software authenticity and integrity.

Code signing process

- Key Pair Generation – The software publisher generates a public/private key pair.

- Certificate Issuance – The public key is included in a digital certificate, typically issued by a Certificate Authority (CA) that verifies the publisher’s identity.

- Hash Generation – The publisher computes a cryptographic hash of the software.

- Signature Creation – The hash is encrypted with the private key, producing a digital signature.

- Attaching the Signature – The signature and the certificate are embedded in the software package to allow verification.

Code verification process

- Certificate Validation – Before installation, the system checks the certificate’s validity by ensuring it was issued by a trusted CA and has not been revoked or expired.

- Hash Computation – The system generates a new hash from the downloaded software.

- Signature Decryption – The digital signature is decrypted using the publisher’s public key, revealing the original hash.

- Integrity Check – The system compares the decrypted hash with the newly computed hash. If they match, the software is authentic and unmodified. If they do not match, this indicates tampering or corruption, and the software may be rejected as untrusted.

Some signed software, particularly system-critical applications, also supports per-page hashing. In demand paging, an operating system loads only the necessary portions (pages) of a program into memory as needed rather than loading the entire executable at once. Instead of verifying a large file before execution, per-page hashing allows each 4KB page to be individually validated upon loading. This ensures integrity at runtime, helping detect in-memory modifications or tampering even after installation.
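Per-page hashing can be sketched with `hashlib`. The example assumes SHA-256 and a 4,096-byte page, uses a made-up byte string in place of a real executable, and takes the list of trusted hashes as given; in a real system that list would itself need to be covered by the publisher's signature.

```python
import hashlib

PAGE_SIZE = 4096

def page_hashes(image: bytes) -> list:
    """Hash every page of a program image (computed when the code is signed)."""
    return [hashlib.sha256(image[i:i + PAGE_SIZE]).digest()
            for i in range(0, len(image), PAGE_SIZE)]

def verify_page(image: bytes, page_number: int, trusted_hashes: list) -> bool:
    """Check a single page as it is loaded, without reading the rest of the file."""
    start = page_number * PAGE_SIZE
    page = image[start:start + PAGE_SIZE]
    return hashlib.sha256(page).digest() == trusted_hashes[page_number]

program = bytes(range(256)) * 40      # 10,240 bytes: two full pages and a partial one
trusted = page_hashes(program)

modified = bytearray(program)
modified[5000] ^= 0xFF                # flip one byte, which falls inside page 1
modified = bytes(modified)
```

Only the page containing the changed byte fails its check; pages 0 and 2 of the modified image still verify, which is what lets the system validate pages one at a time as they are brought into memory.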

Authentication

Authentication is the process of verifying that a user’s claimed identity is legitimate.

It is important to distinguish authentication from identification:

- Identification is the act of claiming an identity (e.g., entering a username or presenting an ID).

- Authentication is the process of proving that the claimed identity is valid (e.g., by providing a correct password, fingerprint, or security token).

Authorization is a separate process that determines what actions or resources an authenticated user is permitted to access.

Authentication factors

The three factors of authentication are:

- something you have – a physical object, such as a key, smart card, or security token.

- something you know – a secret, such as a password, PIN, or security answer.

- something you are – a biological trait, such as a fingerprint, retina scan, or facial recognition.

Using multi-factor authentication (MFA) enhances security by requiring authentication from two or more different factors. This ensures that even if one factor is compromised, unauthorized access remains difficult.

Importantly, MFA requires factors from different categories. Using two passwords or two security questions does not qualify as multi-factor authentication because both fall under “Something You Know.”
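The category rule can be expressed as a short check. The credential names and the `FACTOR_CATEGORY` table below are hypothetical; the point is only that the count is taken over categories, not over credentials.

```python
# Hypothetical mapping from credential type to factor category.
FACTOR_CATEGORY = {
    "password": "know",
    "pin": "know",
    "security_question": "know",
    "smart_card": "have",
    "security_token": "have",
    "fingerprint": "are",
    "face_scan": "are",
}

def is_multi_factor(credentials):
    """True only if the credentials span at least two factor categories."""
    categories = {FACTOR_CATEGORY[c] for c in credentials}
    return len(categories) >= 2

print(is_multi_factor(["password", "security_question"]))  # False: both are "know"
print(is_multi_factor(["password", "security_token"]))     # True: "know" + "have"
```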

Combined authentication and key exchange protocols

Key exchange and authentication using a trusted third party

When two parties want to communicate securely using symmetric encryption, they need to share a common key. There are three primary ways to achieve this:

- Pre-shared Key Exchange – The key is exchanged outside the network using a secure method, such as reading it over the phone or physically delivering it on a flash drive.

- Public Key Cryptography – The key is securely established using public key techniques (e.g., RSA encryption or Diffie-Hellman key exchange).

- Trusted Third Party (TTP) Key Exchange – A centralized authority manages and distributes keys to authenticated users.

A trusted third party (TTP) is a system that securely holds each participant’s secret key. In this model, Alice and the TTP (Trent) share Alice’s secret key. Likewise, Bob and Trent share Bob’s secret key.

The simplest way of using a trusted third party is to ask it to come up with a session key and send it to the parties that wish to communicate. For example:

- Alice requests a session key from Trent to communicate with Bob. This request is encrypted with Alice’s secret key, ensuring that Trent knows it came from Alice.

- Trent generates a random session key and encrypts it into two messages: One copy is encrypted with Alice’s secret key. Another copy is encrypted with Bob’s secret key.

- Trent sends both encrypted versions to Alice. Alice decrypts her copy to obtain the session key. She then forwards the encrypted session key meant for Bob to Bob.

- Bob decrypts his copy using his secret key. Now both Alice and Bob share the session key for secure communication.
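The four steps above can be sketched as follows. Everything here is an assumption made for illustration: `seal` and `unseal` are a toy authenticated cipher built from HMAC-SHA256 that stands in for a real symmetric cipher, and `trent_issue` and the message fields are hypothetical names.

```python
import hashlib
import hmac
import json
import os

def _stream(key, iv, n):
    """Toy keystream: HMAC-SHA256 in counter mode (illustration only)."""
    blocks = (hmac.new(key, iv + i.to_bytes(4, "big"), hashlib.sha256).digest()
              for i in range(n // 32 + 1))
    return b"".join(blocks)[:n]

def seal(key, obj):
    """Encrypt and authenticate a JSON-serializable object; returns hex text."""
    plain = json.dumps(obj).encode()
    iv = os.urandom(16)
    body = bytes(p ^ s for p, s in zip(plain, _stream(key, iv, len(plain))))
    tag = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    return (iv + body + tag).hex()

def unseal(key, blob):
    """Reverse seal(); raises ValueError on a wrong key or altered data."""
    raw = bytes.fromhex(blob)
    iv, body, tag = raw[:16], raw[16:-32], raw[-32:]
    good = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, good):
        raise ValueError("wrong key or altered message")
    return json.loads(bytes(c ^ s for c, s in zip(body, _stream(key, iv, len(body)))))

# Trent's database of long-term secret keys (one per participant).
keys = {"alice": os.urandom(32), "bob": os.urandom(32)}

def trent_issue(sender, request_blob):
    """Trent: authenticate the request, then wrap one session key for each party."""
    request = unseal(keys[sender], request_blob)   # only the sender could seal this
    session_key = os.urandom(32).hex()
    for_sender = seal(keys[sender], {"session_key": session_key})
    for_peer = seal(keys[request["peer"]], {"session_key": session_key})
    return for_sender, for_peer

# Alice asks Trent for a key to talk to Bob.
request = seal(keys["alice"], {"peer": "bob"})
for_alice, for_bob = trent_issue("alice", request)
alice_session = unseal(keys["alice"], for_alice)["session_key"]

# Alice forwards for_bob untouched; Bob opens it with his own key.
bob_session = unseal(keys["bob"], for_bob)["session_key"]
print(alice_session == bob_session)
```

Note that nothing inside `for_bob` names Alice or indicates when it was created, so Bob would accept the same blob again if it were sent to him later.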

This simple scheme is vulnerable to replay attacks. An eavesdropper, Eve, can record messages from Alice to Bob and replay them at a later time. Eve might not be able to decode the messages but she can confuse Bob by sending him seemingly valid encrypted messages.

The second problem is that Alice sends Bob an encrypted session key, but Bob has no idea who is requesting to communicate with him. While Trent authenticated Alice (simply by being able to decrypt her request) and authorized her to talk with Bob (by generating the session key), that information has not been conveyed to Bob. This problem can be solved by having Trent package information about the other party within the encrypted messages that contain the session key.

Needham-Schroeder: nonces

The Needham-Schroeder protocol improves the basic key exchange protocol by adding nonces to messages. A nonce is simply a random string – a random bunch of bits that are used to prevent replay attacks.

Step 1. Alice Requests a Session Key from Trent

Alice sends a request to Trent (the TTP), asking to establish communication with Bob. She includes a random nonce (NA) to ensure freshness of the message. Note that this request does not need to be encrypted.

Step 2. Trent Responds with a Secure Session Key

Trent generates a random session key (KAB) for Alice and Bob and sends Alice the following, encrypted with Alice’s secret key:

- Alice’s ID

- Bob’s ID

- Alice’s nonce, NA

- the session key, KAB

- a ticket: a message encrypted for Bob that contains Alice’s ID and the same session key (KAB)

This entire message from Trent is encrypted with Alice’s secret key.

Step 3. Alice Validates the Message and Forwards the Ticket to Bob

Alice decrypts Trent’s response using her secret key and verifies that the nonce matches her original request, ensuring it’s not a replay attack.

She then forwards the ticket (which is encrypted for Bob and unreadable to her) to Bob.

Step 4. Bob Validates the Ticket and Extracts the Session Key

Bob decrypts the ticket using his secret key and learns:

- The session key (KAB).

- That he is communicating with Alice, as her ID is in the ticket.

- That the session key was generated by Trent, since only Trent knows Bob’s secret key and could have created the ticket.

Step 5. Bob Authenticates Alice

Bob now needs to confirm that Alice actually has the session key. He does this by sending Alice a challenge-response authentication:

- Bob generates a random nonce (NB), encrypts it with the session key (KAB), and sends it to Alice.

- Alice decrypts the nonce, subtracts 1, then encrypts the result with KAB and sends it back to Bob.

- Bob verifies that the returned value is (NB - 1), proving that Alice knows the session key.

At this point, both Alice and Bob have authenticated each other and share a secure session key for further communication.
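The five Needham-Schroeder steps can be traced in a short sketch. As assumptions for illustration, `seal` and `unseal` are a toy authenticated cipher built from HMAC-SHA256 standing in for a real symmetric cipher, and `trent_reply` and the field names are hypothetical.

```python
import hashlib
import hmac
import json
import os

def _stream(key, iv, n):
    """Toy keystream: HMAC-SHA256 in counter mode (illustration only)."""
    blocks = (hmac.new(key, iv + i.to_bytes(4, "big"), hashlib.sha256).digest()
              for i in range(n // 32 + 1))
    return b"".join(blocks)[:n]

def seal(key, obj):
    """Encrypt and authenticate a JSON-serializable object; returns hex text."""
    plain = json.dumps(obj).encode()
    iv = os.urandom(16)
    body = bytes(p ^ s for p, s in zip(plain, _stream(key, iv, len(plain))))
    tag = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    return (iv + body + tag).hex()

def unseal(key, blob):
    """Reverse seal(); raises ValueError on a wrong key or altered data."""
    raw = bytes.fromhex(blob)
    iv, body, tag = raw[:16], raw[16:-32], raw[-32:]
    good = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, good):
        raise ValueError("wrong key or altered message")
    return json.loads(bytes(c ^ s for c, s in zip(body, _stream(key, iv, len(body)))))

keys = {"alice": os.urandom(32), "bob": os.urandom(32)}  # known to Trent

# Step 1: Alice -> Trent, in the clear: her ID, Bob's ID, and a nonce.
nonce_a = os.urandom(8).hex()
request = {"from": "alice", "to": "bob", "nonce": nonce_a}

def trent_reply(req):
    """Step 2: Trent creates K_AB, a ticket for Bob, and the reply for Alice."""
    k_ab = os.urandom(32).hex()
    ticket = seal(keys[req["to"]], {"from": req["from"], "k_ab": k_ab})
    return seal(keys[req["from"]], {"from": req["from"], "to": req["to"],
                                    "nonce": req["nonce"], "k_ab": k_ab,
                                    "ticket": ticket})

# Step 3: Alice decrypts the reply, checks her nonce, and forwards the ticket.
reply = unseal(keys["alice"], trent_reply(request))
if reply["nonce"] != nonce_a or reply["to"] != "bob":
    raise ValueError("reply does not match Alice's request")
k_ab_alice = bytes.fromhex(reply["k_ab"])

# Step 4: Bob decrypts the ticket and learns K_AB and Alice's ID.
bob_view = unseal(keys["bob"], reply["ticket"])
k_ab_bob = bytes.fromhex(bob_view["k_ab"])

# Step 5: Bob challenges Alice with N_B; she must return N_B - 1.
nonce_b = int.from_bytes(os.urandom(8), "big")
challenge = seal(k_ab_bob, {"nonce": nonce_b})
answer = seal(k_ab_alice, {"nonce": unseal(k_ab_alice, challenge)["nonce"] - 1})
alice_authenticated = unseal(k_ab_bob, answer)["nonce"] == nonce_b - 1
print(alice_authenticated)
```

The ticket in this sketch carries no indication of when it was issued, which is the weakness the next section addresses.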

Denning-Sacco Modification: Using Timestamps to Prevent Key Replay Attacks

A major flaw in the Needham-Schroeder protocol is its vulnerability to key replay attacks. This occurs when an attacker, Eve, captures a valid ticket (the message from Trent that is encrypted for Bob and contains the session key) and replays it later to impersonate Alice.

How the Attack Works:

Step 1. Alice initiates a communication session with Bob by sending a ticket encrypted for Bob.

Step 2. The ticket contains:

- Alice’s ID

- Session key (KAB)

Step 3. If Eve records the ticket and later obtains the session key it contains (perhaps through cryptanalysis or a key compromise), she can replay the ticket to Bob.

Step 4. Since Bob has no way to distinguish between an old ticket and a new one, he accepts the session key and proceeds with authentication.

Step 5. Eve, now in possession of KAB, successfully authenticates as Alice and communicates with Bob, who mistakenly believes he is talking to Alice.

To mitigate this attack, Denning & Sacco proposed a simple but effective fix: include a timestamp inside the ticket when it is generated by Trent (the trusted third party):

When Trent creates the ticket that Alice will later forward to Bob, he encrypts it using Bob’s secret key and includes:

- Alice’s ID

- Session key (KAB)

- A timestamp (TA)

When Bob receives the ticket, he checks the timestamp. If the timestamp is too old (e.g., outside an acceptable time window), Bob rejects the ticket, assuming it is a replay attack. If the timestamp is recent, Bob proceeds with authentication.

This fix works because old tickets cannot be reused: Bob rejects a previously captured ticket once the time window has expired.
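Bob's freshness check might look like the sketch below. It operates on a ticket that Bob has already decrypted; the five-minute window, the function name `ticket_is_fresh`, and the ticket fields are assumptions for the example.

```python
import time

MAX_TICKET_AGE = 300  # seconds; the size of the window is an assumption

def ticket_is_fresh(ticket, now=None, max_age=MAX_TICKET_AGE):
    """Bob's check on a decrypted ticket: accept only recent timestamps."""
    now = time.time() if now is None else now
    return abs(now - ticket["timestamp"]) <= max_age

ticket = {"from": "alice", "k_ab": "<session key>", "timestamp": 1_000_000}
print(ticket_is_fresh(ticket, now=1_000_060))   # True: one minute old
print(ticket_is_fresh(ticket, now=1_086_400))   # False: replayed a day later
```

Using the absolute difference tolerates a small clock offset in either direction, but the check is only as trustworthy as Bob's clock.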

Otway-Rees Protocol: Session IDs Instead of Timestamps

One challenge with the Denning-Sacco modification is that it relies on synchronized clocks across all entities. If Bob’s clock is significantly out of sync with Trent’s, he might:

- Falsely accept an old ticket, leading to a replay attack.

- Falsely reject a valid ticket, disrupting communication.

An attacker (Eve) could manipulate time synchronization by:

- Injecting fake NTP (Network Time Protocol) responses to mislead Bob’s system clock.

- Generating fake GPS signals to deceive devices relying on GPS for time synchronization.

Since time synchronization itself introduces vulnerabilities, the Otway-Rees protocol replaces timestamps with session IDs to prevent replay attacks without relying on clocks.

The steps in this protocol are:

Step 1: Alice Initiates a Communication Request

Alice sends a message to Bob that includes:

- A unique session ID (S) to track this exchange.

- Both Alice’s and Bob’s IDs (to establish who is communicating).

- A message encrypted with Alice’s secret key, containing:

- Alice’s and Bob’s IDs.

- A random nonce, r1.

- The session ID (S) (ensuring it matches throughout the exchange).

Step 2: Bob Forwards the Request to Trent (Trusted Third Party)

Bob receives Alice’s message and sends Trent:

- Alice’s original message.

- A message encrypted with Bob’s secret key, containing:

- Alice’s and Bob’s IDs (confirming he agrees to communicate with Alice).

- A random nonce, r2.

- The same session ID (S).

Now, Trent sees the same session ID in both encrypted messages, proving:

- Alice initiated the request to talk to Bob.

- Bob agrees to talk to Alice.

- It really is Bob because Bob was able to create a message containing the session ID and encrypt it with his secret key.

Step 3: Trent Generates and Distributes the Session Key

Once Trent verifies the request, he:

- Generates a random session key (KAB) for Alice and Bob.

- Encrypts KAB along with Alice’s nonce, r1, for Alice using Alice’s secret key.

- Encrypts KAB along with Bob’s nonce, r2, for Bob using Bob’s secret key.

- Sends both encrypted session keys to Bob, along with the session ID (S).

Bob then forwards Alice’s encrypted key to her.

The Otway-Rees protocol is secure because:

- Prevents replay attacks: Even if an attacker replays an old message, the session ID must match in all encrypted exchanges.

- Does not require synchronized clocks: Eliminates the risk of time-based attacks.

- Ensures mutual agreement: Trent confirms that both Alice and Bob want to communicate.

- Incorporates nonces: Trent’s response includes the nonces r1 and r2, so an attacker cannot inject an old session key, even one that has been cracked. If the attacker sends Bob a new session ID along with old encrypted session keys, the nonces will not match and the replay is detected.
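The three Otway-Rees steps described above can be sketched as follows. As assumptions for illustration, `seal` and `unseal` are a toy authenticated cipher built from HMAC-SHA256 standing in for a real symmetric cipher, and the function `trent` and the message field names are hypothetical.

```python
import hashlib
import hmac
import json
import os

def _stream(key, iv, n):
    """Toy keystream: HMAC-SHA256 in counter mode (illustration only)."""
    blocks = (hmac.new(key, iv + i.to_bytes(4, "big"), hashlib.sha256).digest()
              for i in range(n // 32 + 1))
    return b"".join(blocks)[:n]

def seal(key, obj):
    """Encrypt and authenticate a JSON-serializable object; returns hex text."""
    plain = json.dumps(obj).encode()
    iv = os.urandom(16)
    body = bytes(p ^ s for p, s in zip(plain, _stream(key, iv, len(plain))))
    tag = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    return (iv + body + tag).hex()

def unseal(key, blob):
    """Reverse seal(); raises ValueError on a wrong key or altered data."""
    raw = bytes.fromhex(blob)
    iv, body, tag = raw[:16], raw[16:-32], raw[-32:]
    good = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, good):
        raise ValueError("wrong key or altered message")
    return json.loads(bytes(c ^ s for c, s in zip(body, _stream(key, iv, len(body)))))

keys = {"alice": os.urandom(32), "bob": os.urandom(32)}  # known to Trent

# Step 1: Alice -> Bob: session ID, both IDs, and a part sealed with her key.
sid, r1 = os.urandom(8).hex(), os.urandom(8).hex()
msg_alice = {"sid": sid, "a": "alice", "b": "bob",
             "sealed_a": seal(keys["alice"],
                              {"a": "alice", "b": "bob", "nonce": r1, "sid": sid})}

# Step 2: Bob -> Trent: Alice's message plus a part sealed with his own key.
r2 = os.urandom(8).hex()
msg_bob = dict(msg_alice,
               sealed_b=seal(keys["bob"],
                             {"a": "alice", "b": "bob", "nonce": r2, "sid": sid}))

def trent(msg):
    """Step 3: check that both sealed parts agree, then issue K_AB to each."""
    part_a = unseal(keys[msg["a"]], msg["sealed_a"])
    part_b = unseal(keys[msg["b"]], msg["sealed_b"])
    for part in (part_a, part_b):
        if (part["sid"], part["a"], part["b"]) != (msg["sid"], msg["a"], msg["b"]):
            raise ValueError("session ID or identities do not match")
    k_ab = os.urandom(32).hex()
    return {"sid": msg["sid"],
            "for_a": seal(keys[msg["a"]], {"nonce": part_a["nonce"], "k_ab": k_ab}),
            "for_b": seal(keys[msg["b"]], {"nonce": part_b["nonce"], "k_ab": k_ab})}

reply = trent(msg_bob)
bob_part = unseal(keys["bob"], reply["for_b"])
alice_part = unseal(keys["alice"], reply["for_a"])   # after Bob forwards it
if bob_part["nonce"] != r2 or alice_part["nonce"] != r1:
    raise ValueError("stale or replayed response from Trent")
print(alice_part["k_ab"] == bob_part["k_ab"])
```

Each party checks its own nonce in Trent's response, so a response built from an earlier exchange is rejected without any reference to a clock.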

Kerberos

Kerberos is a trusted authentication, authorization, and key exchange protocol that uses symmetric cryptography. It is based on the Needham-Schroeder protocol, incorporating the Denning-Sacco modification (timestamps) to prevent replay attacks.

When Alice wants to communicate securely with Bob (who may be another user or a service), she must first request access from Kerberos. If authorized, Kerberos provides her with two encrypted messages:

- A message encrypted with Alice’s secret key, containing:

- A session key (KAB) for secure communication with Bob.

- A ticket encrypted with Bob’s secret key (which Alice cannot read), containing:

- The same session key (KAB).

Alice forwards the ticket to Bob. When Bob decrypts it using his secret key, he:

- Confirms that Kerberos issued the ticket since only Kerberos knows his secret key.

- Obtains KAB, the session key he now shares with Alice.

Now that both Alice and Bob have the session key, they can securely communicate by encrypting messages using KAB.

Preventing Replay Attacks: Mutual Authentication

To ensure that Alice is legitimate, she must prove she can extract the session key sent by Kerberos. She does this by:

- Generating a new timestamp (TA).

- Encrypting TA with KAB and sending it to Bob.

Bob verifies that the timestamp is recent and then authenticates himself to Alice by:

- Incrementing TA by 1.

- Encrypting the modified value with KAB and sending it back to Alice.

Since only Bob could have decrypted TA and modified it, Alice knows she is talking to the real Bob.
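The timestamp exchange above can be sketched as follows, assuming Alice and Bob already hold the session key. As assumptions for illustration, `seal` and `unseal` are a toy authenticated cipher built from HMAC-SHA256 standing in for a real symmetric cipher, and `bob_respond`, `MAX_SKEW`, and the field names are hypothetical.

```python
import hashlib
import hmac
import json
import os
import time

def _stream(key, iv, n):
    """Toy keystream: HMAC-SHA256 in counter mode (illustration only)."""
    blocks = (hmac.new(key, iv + i.to_bytes(4, "big"), hashlib.sha256).digest()
              for i in range(n // 32 + 1))
    return b"".join(blocks)[:n]

def seal(key, obj):
    """Encrypt and authenticate a JSON-serializable object; returns hex text."""
    plain = json.dumps(obj).encode()
    iv = os.urandom(16)
    body = bytes(p ^ s for p, s in zip(plain, _stream(key, iv, len(plain))))
    tag = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    return (iv + body + tag).hex()

def unseal(key, blob):
    """Reverse seal(); raises ValueError on a wrong key or altered data."""
    raw = bytes.fromhex(blob)
    iv, body, tag = raw[:16], raw[16:-32], raw[-32:]
    good = hmac.new(key, b"tag" + iv + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, good):
        raise ValueError("wrong key or altered message")
    return json.loads(bytes(c ^ s for c, s in zip(body, _stream(key, iv, len(body)))))

k_ab = os.urandom(32)   # session key: Alice got it from Kerberos, Bob from the ticket
MAX_SKEW = 300          # seconds Bob tolerates; the value is an assumption

# Alice proves she could extract K_AB by sealing a fresh timestamp with it.
t_a = int(time.time())
authenticator = seal(k_ab, {"timestamp": t_a})

def bob_respond(blob, now=None):
    """Bob: check that the timestamp is recent, then answer with T_A + 1."""
    now = time.time() if now is None else now
    t = unseal(k_ab, blob)["timestamp"]
    if abs(now - t) > MAX_SKEW:
        raise ValueError("stale timestamp: possible replay")
    return seal(k_ab, {"timestamp": t + 1})

# Alice checks the answer: only a holder of K_AB could have produced T_A + 1.
response = bob_respond(authenticator)
bob_authenticated = unseal(k_ab, response)["timestamp"] == t_a + 1
print(bob_authenticated)
```

A recorded copy of `authenticator` presented an hour later fails Bob's recency check, so an eavesdropper cannot reuse it.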

Avoiding frequent password prompts

Without optimization, Alice would need to enter her password every time she requests a service, since her secret key is needed to decrypt the session key for each request. Caching her key in a file would be a security risk.

To solve this, Kerberos is divided into two components:

- Authentication Server (AS):

- Handles initial user authentication.

- Issues a session key for Alice to communicate with the Ticket Granting Server (TGS).

- This session key can be cached, avoiding repeated password entry.

- Ticket Granting Server (TGS):

- Handles requests for services (e.g., accessing Bob’s server).

- Issues a new session key for Alice’s communication with the specific service.

- Provides a ticket, which Alice presents to the service instead of entering credentials again.

Authentication Protocols

In the next family of protocols, we will look at mechanisms that focus only on authentication and not key exchange.

Public key authentication